These are my notes from week 1 of the Cloudnet Cilium study.

In this post, I install the Cilium CNI and verify that Pod communication works.

Installing the Cilium CNI

First, check the system requirements for installing Cilium.

root@k8s-ctr:~# arch

aarch64

root@k8s-ctr:~# uname -r

6.8.0-53-generic

To confirm that the eBPF and networking features Cilium relies on are actually enabled in the kernel, check the kernel configuration options.

# [Kernel config options] Base requirements

grep -E 'CONFIG_BPF|CONFIG_BPF_SYSCALL|CONFIG_NET_CLS_BPF|CONFIG_BPF_JIT|CONFIG_NET_CLS_ACT|CONFIG_NET_SCH_INGRESS|CONFIG_CRYPTO_SHA1|CONFIG_CRYPTO_USER_API_HASH|CONFIG_CGROUPS|CONFIG_CGROUP_BPF|CONFIG_PERF_EVENTS|CONFIG_SCHEDSTATS' /boot/config-$(uname -r)

CONFIG_BPF=y

CONFIG_BPF_SYSCALL=y

CONFIG_BPF_JIT=y

CONFIG_NET_CLS_BPF=m

CONFIG_NET_CLS_ACT=y

CONFIG_NET_SCH_INGRESS=m

CONFIG_CRYPTO_SHA1=y

CONFIG_CRYPTO_USER_API_HASH=m

CONFIG_CGROUPS=y

CONFIG_CGROUP_BPF=y

CONFIG_PERF_EVENTS=y

CONFIG_SCHEDSTATS=y

# [Kernel config options] Requirements for Tunneling and Routing

grep -E 'CONFIG_VXLAN=y|CONFIG_VXLAN=m|CONFIG_GENEVE=y|CONFIG_GENEVE=m|CONFIG_FIB_RULES=y' /boot/config-$(uname -r)

CONFIG_FIB_RULES=y # built into the kernel

CONFIG_VXLAN=m # compiled as a module → loaded into the kernel when used

CONFIG_GENEVE=m # compiled as a module → loaded into the kernel when used

## (Reference) Loading kernel modules

lsmod | grep -E 'vxlan|geneve'

modprobe geneve

lsmod | grep -E 'vxlan|geneve'

# [Kernel config options] Requirements for L7 and FQDN Policies

grep -E 'CONFIG_NETFILTER_XT_TARGET_TPROXY|CONFIG_NETFILTER_XT_TARGET_MARK|CONFIG_NETFILTER_XT_TARGET_CT|CONFIG_NETFILTER_XT_MATCH_MARK|CONFIG_NETFILTER_XT_MATCH_SOCKET' /boot/config-$(uname -r)

CONFIG_NETFILTER_XT_TARGET_CT=m

CONFIG_NETFILTER_XT_TARGET_MARK=m

CONFIG_NETFILTER_XT_TARGET_TPROXY=m

CONFIG_NETFILTER_XT_MATCH_MARK=m

CONFIG_NETFILTER_XT_MATCH_SOCKET=m

...

# [Kernel config options] Requirements for Netkit Device Mode

grep -E 'CONFIG_NETKIT=y|CONFIG_NETKIT=m' /boot/config-$(uname -r)
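The grep patterns above match substrings, so `CONFIG_BPF` also matches longer option names, and an option that is absent simply prints nothing. As a rough sketch, a small helper can report each option explicitly. The function name `check_kernel_config` is my own and not part of Cilium or the study material, and it assumes the distribution ships a `/boot/config-$(uname -r)` file.

```bash
# check_kernel_config FILE OPTION...
# Prints each option with its value (y or m) or "missing", and returns
# non-zero if at least one option is not set in FILE.
# NOTE: hypothetical helper written for this post, not a Cilium tool.
check_kernel_config() {
  local file="$1"; shift
  local missing=0 opt value
  for opt in "$@"; do
    # Exact match on the option name, so CONFIG_BPF does not match CONFIG_BPF_JIT.
    value=$(awk -F= -v k="$opt" '$1 == k { print $2; exit }' "$file")
    if [ -n "$value" ]; then
      printf '%-32s %s\n' "$opt" "$value"
    else
      printf '%-32s %s\n' "$opt" "missing"
      missing=1
    fi
  done
  return "$missing"
}

# Example:
# check_kernel_config "/boot/config-$(uname -r)" CONFIG_BPF CONFIG_VXLAN CONFIG_NETKIT
```

An option reported as `m` is only available once the module is loaded, which is what the `modprobe` step above is for.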

Next, remove the Flannel CNI installed in the previous lab, then install the Cilium CNI.

root@k8s-ctr:~# helm uninstall -n kube-flannel flannel

release "flannel" uninstalled

root@k8s-ctr:~# helm list -A

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

# Check before removal

ip -c link

brctl show

ip -c route

################

# Remove vNICs #

################

# Flannel creates flannel.1 (VXLAN tunnel interface), cni0 (Pod bridge), and veth* (interfaces connected to Pods) on each node, so check for leftovers

# Manually remove the leftover interfaces

ip link del flannel.1

ip link del cni0

for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i sudo ip link del flannel.1 ; echo; done

for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh -o StrictHostKeyChecking=no vagrant@k8s-$i sudo ip link del cni0 ; echo; done

# Verify removal

ip -c link

brctl show

ip -c route

##################################

# Remove the existing kube-proxy #

##################################

# Remove the iptables rules created by Flannel and kube-proxy

iptables-save | grep -v KUBE | grep -v FLANNEL | iptables-restore

iptables-save

sshpass -p 'vagrant' ssh vagrant@k8s-w1 "sudo iptables-save | grep -v KUBE | grep -v FLANNEL | sudo iptables-restore"

sshpass -p 'vagrant' ssh vagrant@k8s-w1 sudo iptables-save

sshpass -p 'vagrant' ssh vagrant@k8s-w2 "sudo iptables-save | grep -v KUBE | grep -v FLANNEL | sudo iptables-restore"

sshpass -p 'vagrant' ssh vagrant@k8s-w2 sudo iptables-save

kubectl -n kube-system delete ds kube-proxy

kubectl -n kube-system delete cm kube-proxy
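Piping straight into `iptables-restore` changes the live ruleset in one step, so it can help to preview what the filter will drop first. The sketch below wraps the same `grep -v` filter in a function; the name `strip_cni_rules` and the file names in the usage comment are placeholders of mine.

```bash
# strip_cni_rules: read an iptables-save dump on stdin and drop every line
# that mentions KUBE or FLANNEL (same effect as the two grep -v calls above).
# NOTE: hypothetical helper name, used only for illustration.
strip_cni_rules() {
  grep -v -e KUBE -e FLANNEL
}

# Dry run (file names are arbitrary):
# iptables-save > before.rules
# strip_cni_rules < before.rules > after.rules
# diff before.rules after.rules      # review the lines that would be removed
# iptables-restore < after.rules     # apply only after reviewing the diff
```

Because the filter works on plain text, any rule whose comment or chain name happens to contain `KUBE` or `FLANNEL` is removed as well, which is another reason to look at the diff first.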

# Check the IPAM (PodCIDR) range assigned to Pods on each node

# kube-controller-manager, configured with --allocate-node-cidrs=true, allocates the CIDRs automatically

kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'

k8s-ctr 10.244.0.0/24

k8s-w1 10.244.1.0/24

k8s-w2 10.244.2.0/24

Now install Cilium 1.17.5 from its Helm chart.

# Add the helm repo

root@k8s-ctr:~# helm repo add cilium https://helm.cilium.io/

"cilium" has been added to your repositories

# Install with helm

root@k8s-ctr:~# helm install cilium cilium/cilium --version 1.17.5 --namespace kube-system \

--set k8sServiceHost=192.168.10.100 --set k8sServicePort=6443 \

--set kubeProxyReplacement=true \

--set routingMode=native \

--set autoDirectNodeRoutes=true \

--set ipam.mode="cluster-pool" \

--set ipam.operator.clusterPoolIPv4PodCIDRList={"172.20.0.0/16"} \

--set ipv4NativeRoutingCIDR=172.20.0.0/16 \

--set endpointRoutes.enabled=true \

--set installNoConntrackIptablesRules=true \

--set bpf.masquerade=true \

--set ipv6.enabled=false

NAME: cilium

LAST DEPLOYED: Sun Jul 20 02:46:57 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

You have successfully installed Cilium with Hubble.

Your release version is 1.17.5.

For any further help, visit https://docs.cilium.io/en/v1.17/gettinghelp

# Verify

root@k8s-ctr:~# helm get values cilium -n kube-system

USER-SUPPLIED VALUES:
autoDirectNodeRoutes: true
bpf:
  masquerade: true
endpointRoutes:
  enabled: true
installNoConntrackIptablesRules: true
ipam:
  mode: cluster-pool
  operator:
    clusterPoolIPv4PodCIDRList:
    - 172.20.0.0/16
ipv4NativeRoutingCIDR: 172.20.0.0/16
ipv6:
  enabled: false
k8sServiceHost: 192.168.10.100
k8sServicePort: 6443
kubeProxyReplacement: true
routingMode: native

root@k8s-ctr:~# helm list -A

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

cilium kube-system 1 2025-07-20 02:46:57.07293655 +0900 KST deployed cilium-1.17.5 1.17.5

Every 2.0s: kubectl get pod -A k8s-ctr: Sun Jul 20 02:48:15 2025

NAMESPACE NAME READY STATUS RESTARTS AGE

default curl-pod 1/1 Running 0 3h35m

default webpod-697b545f57-49wg4 1/1 Running 0 3h35m

default webpod-697b545f57-b6fwp 1/1 Running 0 3h35m

kube-system cilium-5w7nq 0/1 Running 0 76s

kube-system cilium-796f7 0/1 Init:5/6 0 77s

kube-system cilium-envoy-2t7xx 0/1 ContainerCreating 0 77s

kube-system cilium-envoy-7v82q 0/1 ContainerCreating 0 77s

kube-system cilium-envoy-wd7zd 0/1 Running 0 77s

kube-system cilium-operator-865bc7f457-nw7vv 0/1 ContainerCreating 0 76s

kube-system cilium-operator-865bc7f457-z5668 0/1 ContainerCreating 0 77s

kube-system cilium-qlkmm 0/1 Running 0 76s

kube-system coredns-674b8bbfcf-f9glh 0/1 Running 6 (92s ago) 5h8m

kube-system coredns-674b8bbfcf-pjcrm 0/1 Running 6 (92s ago) 5h8m

kube-system etcd-k8s-ctr 1/1 Running 0 5h9m

kube-system kube-apiserver-k8s-ctr 1/1 Running 0 5h9m

kube-system kube-controller-manager-k8s-ctr 1/1 Running 0 5h9m

kube-system kube-scheduler-k8s-ctr 1/1 Running 0 5h9m

# Check iptables on the nodes

# There are remarkably few rules!

root@k8s-ctr:~# iptables -t nat -S

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N CILIUM_OUTPUT_nat

-N CILIUM_POST_nat

-N CILIUM_PRE_nat

-N KUBE-KUBELET-CANARY

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_nat" -j CILIUM_PRE_nat

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_nat" -j CILIUM_OUTPUT_nat

-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_nat" -j CILIUM_POST_nat

>> node : k8s-w1 <<

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N CILIUM_OUTPUT_nat

-N CILIUM_POST_nat

-N CILIUM_PRE_nat

-N KUBE-KUBELET-CANARY

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_nat" -j CILIUM_PRE_nat

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_nat" -j CILIUM_OUTPUT_nat

-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_nat" -j CILIUM_POST_nat

>> node : k8s-w2 <<

-P PREROUTING ACCEPT

-P INPUT ACCEPT

-P OUTPUT ACCEPT

-P POSTROUTING ACCEPT

-N CILIUM_OUTPUT_nat

-N CILIUM_POST_nat

-N CILIUM_PRE_nat

-N KUBE-KUBELET-CANARY

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_nat" -j CILIUM_PRE_nat

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_nat" -j CILIUM_OUTPUT_nat

-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_nat" -j CILIUM_POST_nat

root@k8s-ctr:~# iptables-save

# Generated by iptables-save v1.8.10 (nf_tables) on Sun Jul 20 02:50:07 2025

*mangle

:PREROUTING ACCEPT [139129:432987788]

:INPUT ACCEPT [139129:432987788]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [130025:52036669]

:POSTROUTING ACCEPT [130025:52036669]

:CILIUM_POST_mangle - [0:0]

:CILIUM_PRE_mangle - [0:0]

:KUBE-IPTABLES-HINT - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_mangle" -j CILIUM_PRE_mangle

-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_mangle" -j CILIUM_POST_mangle

-A CILIUM_PRE_mangle ! -o lo -m socket --transparent -m mark ! --mark 0xe00/0xf00 -m mark ! --mark 0x800/0xf00 -m comment --comment "cilium: any->pod redirect proxied traffic to host proxy" -j MARK --set-xmark 0x200/0xffffffff

-A CILIUM_PRE_mangle -p tcp -m mark --mark 0x49b00200 -m comment --comment "cilium: TPROXY to host cilium-dns-egress proxy" -j TPROXY --on-port 45129 --on-ip 127.0.0.1 --tproxy-mark 0x200/0xffffffff

-A CILIUM_PRE_mangle -p udp -m mark --mark 0x49b00200 -m comment --comment "cilium: TPROXY to host cilium-dns-egress proxy" -j TPROXY --on-port 45129 --on-ip 127.0.0.1 --tproxy-mark 0x200/0xffffffff

COMMIT

# Completed on Sun Jul 20 02:50:07 2025

# Generated by iptables-save v1.8.10 (nf_tables) on Sun Jul 20 02:50:07 2025

*raw

:PREROUTING ACCEPT [0:0]

:OUTPUT ACCEPT [0:0]

:CILIUM_OUTPUT_raw - [0:0]

:CILIUM_PRE_raw - [0:0]

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_raw" -j CILIUM_PRE_raw

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_raw" -j CILIUM_OUTPUT_raw

-A CILIUM_OUTPUT_raw -d 172.20.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -s 172.20.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o lxc+ -m mark --mark 0xa00/0xfffffeff -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o cilium_host -m mark --mark 0xa00/0xfffffeff -m comment --comment "cilium: NOTRACK for proxy return traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o lxc+ -m mark --mark 0x800/0xe00 -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack

-A CILIUM_OUTPUT_raw -o cilium_host -m mark --mark 0x800/0xe00 -m comment --comment "cilium: NOTRACK for L7 proxy upstream traffic" -j CT --notrack

-A CILIUM_PRE_raw -d 172.20.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack

-A CILIUM_PRE_raw -s 172.20.0.0/16 -m comment --comment "cilium: NOTRACK for pod traffic" -j CT --notrack

-A CILIUM_PRE_raw -m mark --mark 0x200/0xf00 -m comment --comment "cilium: NOTRACK for proxy traffic" -j CT --notrack

COMMIT

# Completed on Sun Jul 20 02:50:07 2025

# Generated by iptables-save v1.8.10 (nf_tables) on Sun Jul 20 02:50:07 2025

*filter

:INPUT ACCEPT [139129:432987788]

:FORWARD ACCEPT [0:0]

:OUTPUT ACCEPT [130025:52036669]

:CILIUM_FORWARD - [0:0]

:CILIUM_INPUT - [0:0]

:CILIUM_OUTPUT - [0:0]

:KUBE-FIREWALL - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

-A INPUT -m comment --comment "cilium-feeder: CILIUM_INPUT" -j CILIUM_INPUT

-A INPUT -j KUBE-FIREWALL

-A FORWARD -m comment --comment "cilium-feeder: CILIUM_FORWARD" -j CILIUM_FORWARD

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT" -j CILIUM_OUTPUT

-A OUTPUT -j KUBE-FIREWALL

-A CILIUM_FORWARD -o cilium_host -m comment --comment "cilium: any->cluster on cilium_host forward accept" -j ACCEPT

-A CILIUM_FORWARD -i cilium_host -m comment --comment "cilium: cluster->any on cilium_host forward accept (nodeport)" -j ACCEPT

-A CILIUM_FORWARD -i lxc+ -m comment --comment "cilium: cluster->any on lxc+ forward accept" -j ACCEPT

-A CILIUM_FORWARD -i cilium_net -m comment --comment "cilium: cluster->any on cilium_net forward accept (nodeport)" -j ACCEPT

-A CILIUM_FORWARD -o lxc+ -m comment --comment "cilium: any->cluster on lxc+ forward accept" -j ACCEPT

-A CILIUM_FORWARD -i lxc+ -m comment --comment "cilium: cluster->any on lxc+ forward accept (nodeport)" -j ACCEPT

-A CILIUM_INPUT -m mark --mark 0x200/0xf00 -m comment --comment "cilium: ACCEPT for proxy traffic" -j ACCEPT

-A CILIUM_OUTPUT -m mark --mark 0xa00/0xe00 -m comment --comment "cilium: ACCEPT for proxy traffic" -j ACCEPT

-A CILIUM_OUTPUT -m mark --mark 0x800/0xe00 -m comment --comment "cilium: ACCEPT for l7 proxy upstream traffic" -j ACCEPT

-A CILIUM_OUTPUT -m mark ! --mark 0xe00/0xf00 -m mark ! --mark 0xd00/0xf00 -m mark ! --mark 0x400/0xf00 -m mark ! --mark 0xa00/0xe00 -m mark ! --mark 0x800/0xe00 -m mark ! --mark 0xf00/0xf00 -m comment --comment "cilium: host->any mark as from host" -j MARK --set-xmark 0xc00/0xf00

-A KUBE-FIREWALL ! -s 127.0.0.0/8 -d 127.0.0.0/8 -m comment --comment "block incoming localnet connections" -m conntrack ! --ctstate RELATED,ESTABLISHED,DNAT -j DROP

COMMIT

# Completed on Sun Jul 20 02:50:07 2025

# Generated by iptables-save v1.8.10 (nf_tables) on Sun Jul 20 02:50:07 2025

*nat

:PREROUTING ACCEPT [16:936]

:INPUT ACCEPT [16:936]

:OUTPUT ACCEPT [1991:119726]

:POSTROUTING ACCEPT [1991:119726]

:CILIUM_OUTPUT_nat - [0:0]

:CILIUM_POST_nat - [0:0]

:CILIUM_PRE_nat - [0:0]

:KUBE-KUBELET-CANARY - [0:0]

-A PREROUTING -m comment --comment "cilium-feeder: CILIUM_PRE_nat" -j CILIUM_PRE_nat

-A OUTPUT -m comment --comment "cilium-feeder: CILIUM_OUTPUT_nat" -j CILIUM_OUTPUT_nat

-A POSTROUTING -m comment --comment "cilium-feeder: CILIUM_POST_nat" -j CILIUM_POST_nat

COMMIT

# Completed on Sun Jul 20 02:50:07 2025

# Run iptables-save on w1 and w2 in the same way

Options specified at install time:

| Option | Description |
|---|---|
| k8sServiceHost=192.168.10.100 | Fixed IP address of the API server that Cilium connects to |
| k8sServicePort=6443 | API server port |
| kubeProxyReplacement=true | Replaces kube-proxy; Cilium handles Service load balancing with eBPF |
| routingMode=native | Uses L3 native routing without an overlay |
| autoDirectNodeRoutes=true | Automatically installs static routes to the other nodes' Pod CIDRs |
| ipam.mode="cluster-pool" | Uses cluster-pool mode, in which Cilium manages its own IP pool |
| ipam.operator.clusterPoolIPv4PodCIDRList={"172.20.0.0/16"} | Pod IP range for the whole cluster |
| ipv4NativeRoutingCIDR=172.20.0.0/16 | Pod CIDR range handled by native routing |
| endpointRoutes.enabled=true | Enables a per-Pod route for each endpoint |
| installNoConntrackIptablesRules=true | Installs NOTRACK iptables rules so that Pod traffic skips netfilter connection tracking (visible in the *raw table above) |
| bpf.masquerade=true | Performs masquerading in eBPF |
| ipv6.enabled=false | Disables IPv6 |
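The same options can also be kept in a values file instead of a long chain of `--set` flags. The sketch below writes a file that mirrors the `helm get values` output shown earlier; the function name `write_cilium_values` and the file name `cilium-values.yaml` are arbitrary choices of mine.

```bash
# write_cilium_values PATH: write the install options used in this post
# as a Helm values file. The keys mirror the `helm get values` output above.
# NOTE: function and file names are placeholders chosen for this example.
write_cilium_values() {
  cat > "$1" <<'EOF'
k8sServiceHost: 192.168.10.100
k8sServicePort: 6443
kubeProxyReplacement: true
routingMode: native
autoDirectNodeRoutes: true
ipam:
  mode: cluster-pool
  operator:
    clusterPoolIPv4PodCIDRList:
      - 172.20.0.0/16
ipv4NativeRoutingCIDR: 172.20.0.0/16
endpointRoutes:
  enabled: true
installNoConntrackIptablesRules: true
bpf:
  masquerade: true
ipv6:
  enabled: false
EOF
}

# Usage:
# write_cilium_values cilium-values.yaml
# helm install cilium cilium/cilium --version 1.17.5 --namespace kube-system -f cilium-values.yaml
```

A values file avoids the shell quoting around `{"172.20.0.0/16"}` and can be kept under version control.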

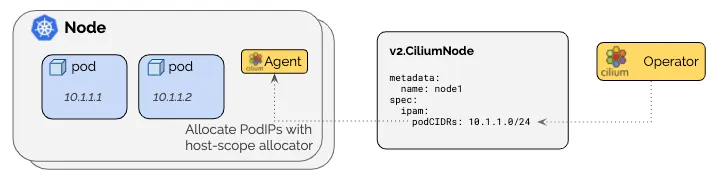

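Before Cilium was installed, Flannel was the active CNI, so each node's Pod CIDR came from the 10.244.x.0/24 range and Pod IPs were allocated from the range Flannel managed.

After installing Cilium and doing a rolling restart of the webpod deployment, the newly created Pods received IPs from the Pod CIDR configured for Cilium (172.20.0.0/16). Re-creating curl-pod gave the same result, as the output below shows.

This means Cilium is now managing the Pod network through its own IPAM, and the 172.20.0.0/16 range defined in the Cilium configuration is in use instead of the old Flannel CIDR. Note that the nodes' .spec.podCIDR still reports the 10.244.x.0/24 values; the per-node ranges that Cilium allocated appear in the CiliumNode resources.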

root@k8s-ctr:~# kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.podCIDR}{"\n"}{end}'

k8s-ctr 10.244.0.0/24

k8s-w1 10.244.1.0/24

k8s-w2 10.244.2.0/24

root@k8s-ctr:~# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 0 3h41m 10.244.0.2 k8s-ctr <none> <none>

webpod-697b545f57-49wg4 1/1 Running 0 3h41m 10.244.1.4 k8s-w1 <none> <none>

webpod-697b545f57-b6fwp 1/1 Running 0 3h41m 10.244.2.2 k8s-w2 <none> <none>

root@k8s-ctr:~# kubectl get ciliumnodes -o json | grep podCIDRs -A2

"podCIDRs": [

"172.20.2.0/24"

],

--

"podCIDRs": [

"172.20.0.0/24"

],

--

"podCIDRs": [

"172.20.1.0/24"

],

root@k8s-ctr:~# kubectl rollout restart deployment webpod

deployment.apps/webpod restarted

root@k8s-ctr:~# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 0 3h42m 10.244.0.2 k8s-ctr <none> <none>

webpod-86cbd476cd-h9z8j 1/1 Running 0 14s 172.20.0.112 k8s-w1 <none> <none>

webpod-86cbd476cd-xmjhs 1/1 Running 0 11s 172.20.1.166 k8s-w2 <none> <none>

root@k8s-ctr:~# kubectl delete pod curl-pod --grace-period=0

pod "curl-pod" deleted

root@k8s-ctr:~# cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: curl-pod
  labels:
    app: curl
spec:
  nodeName: k8s-ctr
  containers:
  - name: curl
    image: nicolaka/netshoot
    command: ["tail"]
    args: ["-f", "/dev/null"]
  terminationGracePeriodSeconds: 0
EOF

pod/curl-pod created

root@k8s-ctr:~# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 0 26s 172.20.2.28 k8s-ctr <none> <none>

webpod-86cbd476cd-h9z8j 1/1 Running 0 70s 172.20.0.112 k8s-w1 <none> <none>

webpod-86cbd476cd-xmjhs 1/1 Running 0 67s 172.20.1.166 k8s-w2 <none> <none>

# Verify connectivity

root@k8s-ctr:~# kubectl exec -it curl-pod -- curl webpod | grep Hostname

Hostname: webpod-86cbd476cd-h9z8j
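To check the claim that the new Pod IPs fall inside the Cilium pool rather than the old Flannel range, the membership test can be done with plain shell arithmetic. This is only a sketch; `ip_to_int` and `ip_in_cidr` are helper names I made up, and they handle IPv4 only.

```bash
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1                      # split the address on dots into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_cidr IP CIDR: succeed if IP lies inside CIDR (for example 172.20.0.0/16).
# NOTE: hypothetical helpers for illustration; IPv4 only.
ip_in_cidr() {
  local ip net bits mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")     # network part before the slash
  bits="${2#*/}"                 # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Example with the addresses from this post:
# ip_in_cidr 172.20.2.28 172.20.0.0/16 && echo "curl-pod is in the Cilium pool"
# ip_in_cidr 10.244.0.2  172.20.0.0/16 || echo "old Flannel address is outside the pool"
```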

Verifying the Cilium installation

Install the Cilium CLI and use it to check that Cilium was installed correctly.

root@k8s-ctr:~# CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)

CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz >/dev/null 2>&1

tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin

rm cilium-linux-${CLI_ARCH}.tar.gz

cilium
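The install snippet above picks the release archive from `uname -m` and sends curl's output to `/dev/null`, so a wrong architecture or version only shows up when `tar` fails. As a small sketch, the URL construction can be pulled into functions and printed before downloading. The function names are mine, and `v0.0.0` in the example is a placeholder, not a real release.

```bash
# cilium_cli_arch MACHINE: map a `uname -m` value to the architecture suffix
# used in the release file name (arm64 for aarch64, amd64 otherwise),
# following the same rule as the snippet above.
cilium_cli_arch() {
  case "$1" in
    aarch64) echo arm64 ;;
    *)       echo amd64 ;;
  esac
}

# cilium_cli_url VERSION MACHINE: build the download URL used above.
# NOTE: hypothetical helper names; the URL layout is copied from this post.
cilium_cli_url() {
  echo "https://github.com/cilium/cilium-cli/releases/download/$1/cilium-linux-$(cilium_cli_arch "$2").tar.gz"
}

# Example (v0.0.0 is a placeholder version):
# cilium_cli_url v0.0.0 "$(uname -m)"
```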

root@k8s-ctr:~# cilium status

/¯¯\

/¯¯\__/¯¯\ Cilium: OK

\__/¯¯\__/ Operator: OK

/¯¯\__/¯¯\ Envoy DaemonSet: OK

\__/¯¯\__/ Hubble Relay: disabled

\__/ ClusterMesh: disabled

DaemonSet cilium Desired: 3, Ready: 3/3, Available: 3/3

DaemonSet cilium-envoy Desired: 3, Ready: 3/3, Available: 3/3

Deployment cilium-operator Desired: 2, Ready: 2/2, Available: 2/2

Containers: cilium Running: 3

cilium-envoy Running: 3

cilium-operator Running: 2

clustermesh-apiserver

hubble-relay

Cluster Pods: 5/5 managed by Cilium

Helm chart version: 1.17.5

Image versions cilium quay.io/cilium/cilium:v1.17.5@sha256:baf8541723ee0b72d6c489c741c81a6fdc5228940d66cb76ef5ea2ce3c639ea6: 3

cilium-envoy quay.io/cilium/cilium-envoy:v1.32.6-1749271279-0864395884b263913eac200ee2048fd985f8e626@sha256:9f69e290a7ea3d4edf9192acd81694089af048ae0d8a67fb63bd62dc1d72203e: 3

cilium-operator quay.io/cilium/operator-generic:v1.17.5@sha256:f954c97eeb1b47ed67d08cc8fb4108fb829f869373cbb3e698a7f8ef1085b09e: 2

root@k8s-ctr:~# cilium config view

agent-not-ready-taint-key node.cilium.io/agent-not-ready

arping-refresh-period 30s

auto-direct-node-routes true

bpf-distributed-lru false

bpf-events-drop-enabled true

bpf-events-policy-verdict-enabled true

bpf-events-trace-enabled true

bpf-lb-acceleration disabled

bpf-lb-algorithm-annotation false

bpf-lb-external-clusterip false

bpf-lb-map-max 65536

bpf-lb-mode-annotation false

bpf-lb-sock false

bpf-lb-source-range-all-types false

bpf-map-dynamic-size-ratio 0.0025

bpf-policy-map-max 16384

bpf-root /sys/fs/bpf

cgroup-root /run/cilium/cgroupv2

cilium-endpoint-gc-interval 5m0s

cluster-id 0

cluster-name default

cluster-pool-ipv4-cidr 172.20.0.0/16

cluster-pool-ipv4-mask-size 24

clustermesh-enable-endpoint-sync false

clustermesh-enable-mcs-api false

cni-exclusive true

cni-log-file /var/run/cilium/cilium-cni.log

custom-cni-conf false

datapath-mode veth

debug false

debug-verbose

default-lb-service-ipam lbipam

direct-routing-skip-unreachable false

dnsproxy-enable-transparent-mode true

dnsproxy-socket-linger-timeout 10

egress-gateway-reconciliation-trigger-interval 1s

enable-auto-protect-node-port-range true

enable-bpf-clock-probe false

enable-bpf-masquerade true

enable-endpoint-health-checking true

enable-endpoint-lockdown-on-policy-overflow false

enable-endpoint-routes true

enable-experimental-lb false

enable-health-check-loadbalancer-ip false

enable-health-check-nodeport true

enable-health-checking true

enable-hubble true

enable-internal-traffic-policy true

enable-ipv4 true

enable-ipv4-big-tcp false

enable-ipv4-masquerade true

enable-ipv6 false

enable-ipv6-big-tcp false

enable-ipv6-masquerade true

enable-k8s-networkpolicy true

enable-k8s-terminating-endpoint true

enable-l2-neigh-discovery true

enable-l7-proxy true

enable-lb-ipam true

enable-local-redirect-policy false

enable-masquerade-to-route-source false

enable-metrics true

enable-node-selector-labels false

enable-non-default-deny-policies true

enable-policy default

enable-policy-secrets-sync true

enable-runtime-device-detection true

enable-sctp false

enable-source-ip-verification true

enable-svc-source-range-check true

enable-tcx true

enable-vtep false

enable-well-known-identities false

enable-xt-socket-fallback true

envoy-access-log-buffer-size 4096

envoy-base-id 0

envoy-keep-cap-netbindservice false

external-envoy-proxy true

health-check-icmp-failure-threshold 3

http-retry-count 3

hubble-disable-tls false

hubble-export-file-max-backups 5

hubble-export-file-max-size-mb 10

hubble-listen-address :4244

hubble-socket-path /var/run/cilium/hubble.sock

hubble-tls-cert-file /var/lib/cilium/tls/hubble/server.crt

hubble-tls-client-ca-files /var/lib/cilium/tls/hubble/client-ca.crt

hubble-tls-key-file /var/lib/cilium/tls/hubble/server.key

identity-allocation-mode crd

identity-gc-interval 15m0s

identity-heartbeat-timeout 30m0s

install-no-conntrack-iptables-rules true

ipam cluster-pool

ipam-cilium-node-update-rate 15s

iptables-random-fully false

ipv4-native-routing-cidr 172.20.0.0/16

k8s-require-ipv4-pod-cidr false

k8s-require-ipv6-pod-cidr false

kube-proxy-replacement true

kube-proxy-replacement-healthz-bind-address

max-connected-clusters 255

mesh-auth-enabled true

mesh-auth-gc-interval 5m0s

mesh-auth-queue-size 1024

mesh-auth-rotated-identities-queue-size 1024

monitor-aggregation medium

monitor-aggregation-flags all

monitor-aggregation-interval 5s

nat-map-stats-entries 32

nat-map-stats-interval 30s

node-port-bind-protection true

nodeport-addresses

nodes-gc-interval 5m0s

operator-api-serve-addr 127.0.0.1:9234

operator-prometheus-serve-addr :9963

policy-cidr-match-mode

policy-secrets-namespace cilium-secrets

policy-secrets-only-from-secrets-namespace true

preallocate-bpf-maps false

procfs /host/proc

proxy-connect-timeout 2

proxy-idle-timeout-seconds 60

proxy-initial-fetch-timeout 30

proxy-max-concurrent-retries 128

proxy-max-connection-duration-seconds 0

proxy-max-requests-per-connection 0

proxy-xff-num-trusted-hops-egress 0

proxy-xff-num-trusted-hops-ingress 0

remove-cilium-node-taints true

routing-mode native

service-no-backend-response reject

set-cilium-is-up-condition true

set-cilium-node-taints true

synchronize-k8s-nodes true

tofqdns-dns-reject-response-code refused

tofqdns-enable-dns-compression true

tofqdns-endpoint-max-ip-per-hostname 1000

tofqdns-idle-connection-grace-period 0s

tofqdns-max-deferred-connection-deletes 10000

tofqdns-proxy-response-max-delay 100ms

tunnel-protocol vxlan

tunnel-source-port-range 0-0

unmanaged-pod-watcher-interval 15

vtep-cidr

vtep-endpoint

vtep-mac

vtep-mask

write-cni-conf-when-ready /host/etc/cni/net.d/05-cilium.conflist

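With the Cilium CNI installed, let's check the resulting network configuration, runtime state, routing, and eBPF-based processing.

Check the main interfaces created by Cilium

- ip -c addr show cilium_net : Peer of cilium_host in a veth pair. As the output below shows, it carries no IPv4 address, so Pod IPs are not assigned here

- ip -c addr show cilium_host : Host-side interface used by Cilium. It holds a /32 address taken from the node's Pod CIDR (for example 172.20.2.22 on k8s-ctr)

- ip -c addr show lxc_health : Host-side veth interface for the Cilium health endpoint (its peer lives in a separate network namespace)

Check the Cilium health endpoint status

- cilium-dbg status --verbose : Shows, per node, the connectivity status to the NodeIP and the health endpoint IP (whether ICMP and HTTP succeed)

- cilium-dbg status --all-addresses : Lists all Pod IPs currently managed by Cilium, including the health endpoint

Check eBPF-based conntrack / NAT behavior

- cilium bpf ct list global | grep ICMP

- cilium bpf nat list | grep ICMP

Check the routing state (native routing + autoDirectNodeRoutes)

- ip -c route | grep 172.20

- Check the effect of endpointRoutes.enabled=true : verify that a per-Pod lxc interface has been created and that it appears in the routing table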
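The routing checks in the list above can be made a little easier to read by labelling each 172.20.x route. The sketch below is my own helper, not a Cilium command. It assumes plain `ip route` output (without `-c`, because color codes would break the matching), and it assumes that routes to other nodes' Pod CIDRs have the form `<cidr> via <node-ip> dev <iface>`, which this post does not show directly.

```bash
# classify_pod_routes: read `ip route` output on stdin and label each
# 172.20.x route as a per-endpoint route (dev lxc*) or a route via another node.
# NOTE: hypothetical helper; the "via" route format is an assumption.
classify_pod_routes() {
  awk '
    $1 ~ /^172\.20\./ {
      kind = "other"
      for (i = 2; i <= NF; i++) {
        if ($i == "via") kind = "node route via " $(i + 1)
        if ($i == "dev" && $(i + 1) ~ /^lxc/ && kind == "other")
          kind = "endpoint route on " $(i + 1)
      }
      print $1 ": " kind
    }'
}

# Usage:
# ip route | classify_pod_routes
```

With endpointRoutes.enabled=true, each local Pod should show up as an endpoint route on its own lxc interface, and lxc_health appears the same way.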

ip -c addr

for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c addr ; echo; done

ip -c addr show cilium_net

ip -c addr show cilium_host

for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c addr show cilium_net ; echo; done

for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c addr show cilium_host ; echo; done

7: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 36:c6:0f:07:67:95 brd ff:ff:ff:ff:ff:ff

inet6 fe80::34c6:fff:fe07:6795/64 scope link

valid_lft forever preferred_lft forever

8: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether be:07:e8:85:32:5d brd ff:ff:ff:ff:ff:ff

inet 172.20.2.22/32 scope global cilium_host

valid_lft forever preferred_lft forever

inet6 fe80::bc07:e8ff:fe85:325d/64 scope link

valid_lft forever preferred_lft forever

>> node : k8s-w1 <<

9: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 6e:d7:b2:f4:06:b9 brd ff:ff:ff:ff:ff:ff

inet6 fe80::6cd7:b2ff:fef4:6b9/64 scope link

valid_lft forever preferred_lft forever

>> node : k8s-w2 <<

7: cilium_net@cilium_host: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 4e:c7:88:fa:17:52 brd ff:ff:ff:ff:ff:ff

inet6 fe80::4cc7:88ff:fefa:1752/64 scope link

valid_lft forever preferred_lft forever

>> node : k8s-w1 <<

10: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 96:25:5f:0b:71:63 brd ff:ff:ff:ff:ff:ff

inet 172.20.0.22/32 scope global cilium_host

valid_lft forever preferred_lft forever

inet6 fe80::9425:5fff:fe0b:7163/64 scope link

valid_lft forever preferred_lft forever

>> node : k8s-w2 <<

8: cilium_host@cilium_net: <BROADCAST,MULTICAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 82:9e:4f:8b:de:26 brd ff:ff:ff:ff:ff:ff

inet 172.20.1.137/32 scope global cilium_host

valid_lft forever preferred_lft forever

inet6 fe80::809e:4fff:fe8b:de26/64 scope link

valid_lft forever preferred_lft forever

root@k8s-ctr:~# ip -c addr show lxc_health

for i in w1 w2 ; do echo ">> node : k8s-$i <<"; sshpass -p 'vagrant' ssh vagrant@k8s-$i ip -c addr show lxc_health ; echo; done

10: lxc_health@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 46:6e:8b:40:cb:15 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::446e:8bff:fe40:cb15/64 scope link

valid_lft forever preferred_lft forever

>> node : k8s-w1 <<

12: lxc_health@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 82:0d:ed:d1:6e:6c brd ff:ff:ff:ff:ff:ff link-netnsid 3

inet6 fe80::800d:edff:fed1:6e6c/64 scope link

valid_lft forever preferred_lft forever

>> node : k8s-w2 <<

10: lxc_health@if9: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 6a:da:8e:3c:e8:f0 brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::68da:8eff:fe3c:e8f0/64 scope link

valid_lft forever preferred_lft forever

Verifying Cilium CNI communication

Verifying Pod -> Pod communication across nodes

root@k8s-ctr:~# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

curl-pod 1/1 Running 0 22m 172.20.2.28 k8s-ctr <none> <none>

webpod-86cbd476cd-h9z8j 1/1 Running 0 23m 172.20.0.112 k8s-w1 <none> <none>

webpod-86cbd476cd-xmjhs 1/1 Running 0 23m 172.20.1.166 k8s-w2 <none> <none>

root@k8s-ctr:~# kubectl get svc,ep webpod

Warning: v1 Endpoints is deprecated in v1.33+; use discovery.k8s.io/v1 EndpointSlice

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/webpod ClusterIP 10.96.55.34 <none> 80/TCP 4h6m

NAME ENDPOINTS AGE

endpoints/webpod 172.20.0.112:80,172.20.1.166:80 4h6m

root@k8s-ctr:~# WEBPOD1IP=172.20.0.112

# Aliases set up in advance

# Names of the cilium Pods

# export CILIUMPOD0=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-ctr -o jsonpath='{.items[0].metadata.name}')

# export CILIUMPOD1=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w1 -o jsonpath='{.items[0].metadata.name}')

# export CILIUMPOD2=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w2 -o jsonpath='{.items[0].metadata.name}')

# echo $CILIUMPOD0 $CILIUMPOD1 $CILIUMPOD2

# Define the shortcuts (aliases)

# alias c0="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- cilium"

# alias c1="kubectl exec -it $CILIUMPOD1 -n kube-system -c cilium-agent -- cilium"

# alias c2="kubectl exec -it $CILIUMPOD2 -n kube-system -c cilium-agent -- cilium"

# alias c0bpf="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- bpftool"

# alias c1bpf="kubectl exec -it $CILIUMPOD1 -n kube-system -c cilium-agent -- bpftool"

# alias c2bpf="kubectl exec -it $CILIUMPOD2 -n kube-system -c cilium-agent -- bpftool"

# BPF maps : show where traffic for a destination Pod should be sent

root@k8s-ctr:~# c0 map get cilium_ipcache

Key Value State Error

192.168.10.102/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync

172.20.2.28/32 identity=24093 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync

172.20.0.22/32 identity=6 encryptkey=0 tunnelendpoint=192.168.10.101 flags=<none> sync

172.20.1.246/32 identity=4 encryptkey=0 tunnelendpoint=192.168.10.102 flags=<none> sync

172.20.1.137/32 identity=6 encryptkey=0 tunnelendpoint=192.168.10.102 flags=<none> sync

10.0.2.15/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync

172.20.2.22/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync

192.168.10.100/32 identity=1 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync

172.20.1.192/32 identity=1587 encryptkey=0 tunnelendpoint=192.168.10.102 flags=<none> sync

172.20.0.112/32 identity=5632 encryptkey=0 tunnelendpoint=192.168.10.101 flags=<none> sync

192.168.10.101/32 identity=6 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync

172.20.0.204/32 identity=4 encryptkey=0 tunnelendpoint=192.168.10.101 flags=<none> sync

172.20.0.72/32 identity=1587 encryptkey=0 tunnelendpoint=192.168.10.101 flags=<none> sync

0.0.0.0/0 identity=2 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync

172.20.2.192/32 identity=4 encryptkey=0 tunnelendpoint=0.0.0.0 flags=<none> sync

172.20.1.166/32 identity=5632 encryptkey=0 tunnelendpoint=192.168.10.102 flags=<none> sync

root@k8s-ctr:~# c0 map get cilium_ipcache | grep $WEBPOD1IP

172.20.0.112/32 identity=5632 encryptkey=0 tunnelendpoint=192.168.10.101 flags=<none> sync
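In the ipcache output above, entries for Pods on other nodes carry the hosting node's IP in the tunnelendpoint field (172.20.0.112 points at 192.168.10.101, which is k8s-w1), while local Pods and node addresses show 0.0.0.0. A small awk filter can pull out just those two fields for one IP. The helper name `ipcache_lookup` is my own, and the parsing assumes the column layout shown in this post.

```bash
# ipcache_lookup IP: read `cilium map get cilium_ipcache` output on stdin and
# print the identity and tunnel endpoint recorded for IP/32.
# NOTE: hypothetical helper; it relies on the key=value layout shown above.
ipcache_lookup() {
  awk -v key="$1/32" '
    $1 == key {
      for (i = 2; i <= NF; i++) {
        split($i, kv, "=")
        if (kv[1] == "identity") id = kv[2]
        if (kv[1] == "tunnelendpoint") ep = kv[2]
      }
      # 0.0.0.0 means no remote endpoint is recorded for this entry
      print "identity=" id " tunnelendpoint=" (ep == "0.0.0.0" ? "none" : ep)
    }'
}

# Usage with the alias from this post:
# c0 map get cilium_ipcache | ipcache_lookup "$WEBPOD1IP"
```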

kubectl get ciliumendpoints -A

ip -c route | grep lxc

NAMESPACE NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

default curl-pod 24093 ready 172.20.2.28

default webpod-86cbd476cd-h9z8j 5632 ready 172.20.0.112

default webpod-86cbd476cd-xmjhs 5632 ready 172.20.1.166

kube-system coredns-674b8bbfcf-j4cd5 1587 ready 172.20.1.192

kube-system coredns-674b8bbfcf-wzlv2 1587 ready 172.20.0.72

172.20.2.28 dev lxc6914304c4f16 proto kernel scope link

172.20.2.192 dev lxc_health proto kernel scope link

root@k8s-ctr:~# LXC=lxc6914304c4f16

root@k8s-ctr:~# c0bpf net show | grep $LXC

lxc6914304c4f16(12) tcx/ingress cil_from_container prog_id 953 link_id 22

lxc6914304c4f16(12) tcx/egress cil_to_container prog_id 959 link_id 23

Verifying Pod -> Service communication across nodes

# k8s-w1

root@k8s-w1:~# ngrep -tW byline -d eth1 '' 'tcp port 80'

interface: eth1 (192.168.10.0/255.255.255.0)

filter: ( tcp port 80 ) and ((ip || ip6) || (vlan && (ip || ip6)))

root@k8s-w2:~# ngrep -tW byline -d eth1 '' 'tcp port 80'

interface: eth1 (192.168.10.0/255.255.255.0)

filter: ( tcp port 80 ) and ((ip || ip6) || (vlan && (ip || ip6)))

# [k8s-ctr] Send a curl request from curl-pod

root@k8s-ctr:~# kubectl exec -it curl-pod -- curl $WEBPOD1IP

Hostname: webpod-86cbd476cd-h9z8j

IP: 127.0.0.1

IP: ::1

IP: 172.20.0.112

IP: fe80::20a8:85ff:fec5:2374

RemoteAddr: 172.20.2.28:39938

GET / HTTP/1.1

Host: 172.20.0.112

User-Agent: curl/8.14.1

Accept: */*

# Check the output in the k8s-w1 terminal

####

T 2025/07/20 03:28:08.258282 172.20.2.28:39938 -> 172.20.0.112:80 [AP] #4

GET / HTTP/1.1.

Host: 172.20.0.112.

User-Agent: curl/8.14.1.

Accept: */*.

.

##

T 2025/07/20 03:28:08.267870 172.20.0.112:80 -> 172.20.2.28:39938 [AP] #6

HTTP/1.1 200 OK.

Date: Sat, 19 Jul 2025 18:28:08 GMT.

Content-Length: 209.

Content-Type: text/plain; charset=utf-8.

.

Hostname: webpod-86cbd476cd-h9z8j

IP: 127.0.0.1

IP: ::1

IP: 172.20.0.112

IP: fe80::20a8:85ff:fec5:2374

RemoteAddr: 172.20.2.28:39938

GET / HTTP/1.1.

Host: 172.20.0.112.

User-Agent: curl/8.14.1.

Accept: */*.

.