These notes were written while taking part in week 3 of the Cloudnet Cilium study.

CoreDNS

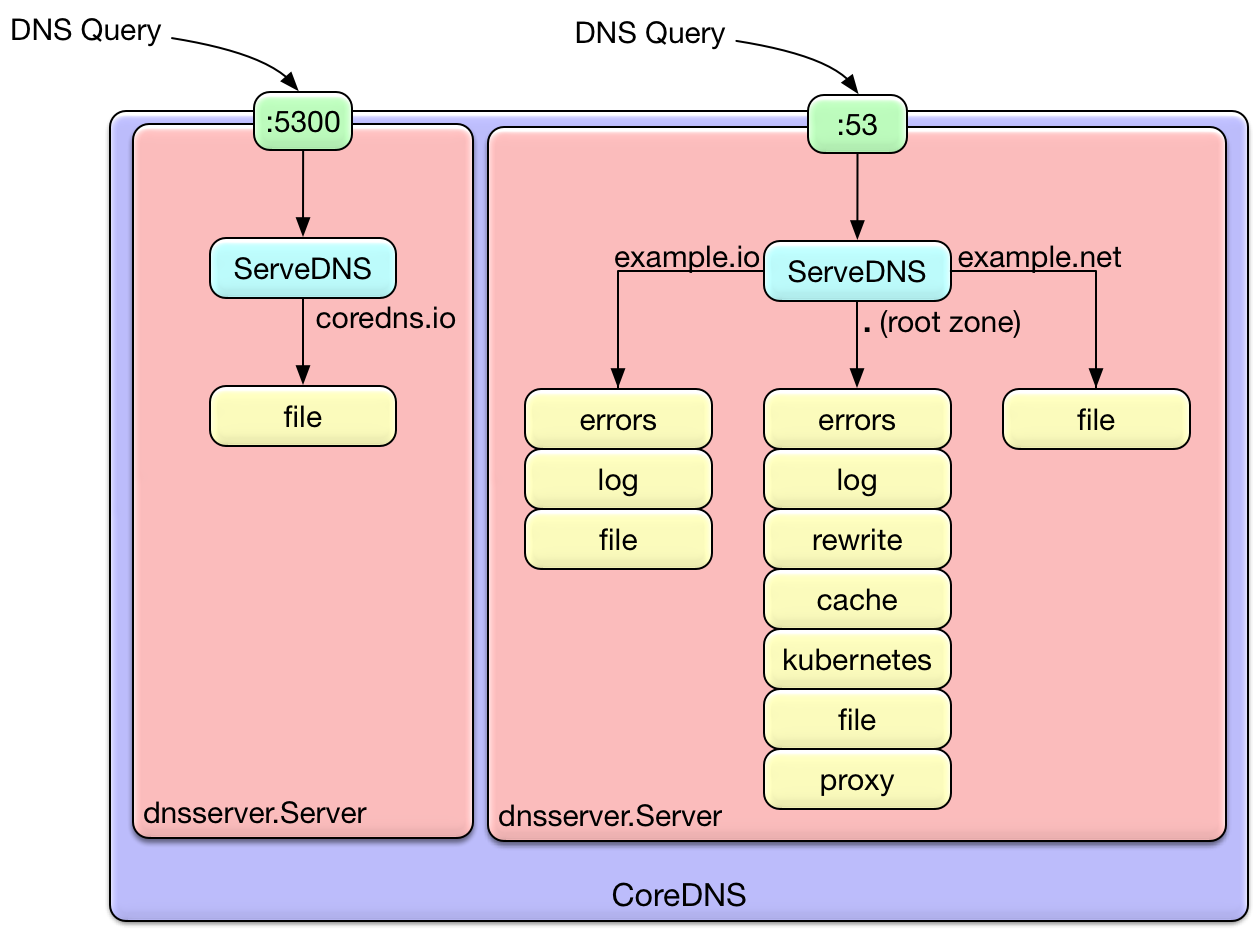

CoreDNS is the default DNS service in Kubernetes. It resolves domain names to IP addresses inside the cluster.

It is exposed as a Service (ClusterIP) named kube-dns, and pods send their DNS queries to the address configured in /etc/resolv.conf (e.g. 10.96.0.10).

root@k8s-ctr:~# kubectl describe cm -n kube-system coredns

...

Corefile:

----

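.:53 { # listen on port 53 for queries to all zones

errors # log errors encountered while handling queries

health { # expose a health endpoint (port 8080 by default) for liveness checks

lameduck 5s # on shutdown, report unhealthy and wait 5 seconds before stopping

}

ready # expose a readiness endpoint: HTTP port 8181 returns 200 OK once all plugins that can signal readiness have done so

kubernetes cluster.local in-addr.arpa ip6.arpa { # Kubernetes plugin: answers queries for the cluster domain (cluster.local) and the reverse zones

pods insecure # answer pod A-record queries from the IP encoded in the name, without checking that the pod exists

fallthrough in-addr.arpa ip6.arpa # for the reverse zones, pass the query to the next plugin when no record is found

ttl 30 # TTL of the responses (30 seconds)

}

prometheus :9153 # expose Prometheus metrics on port 9153

forward . /etc/resolv.conf { # forward queries outside the cluster domain to the upstream resolvers in /etc/resolv.conf (usually external DNS); "." matches all queries

max_concurrent 1000 # at most 1000 concurrent forwarded queries

}

cache 30 { # cache responses for at most 30 seconds

disable success cluster.local # do not cache successful responses for cluster.local

disable denial cluster.local # do not cache negative (e.g. NXDOMAIN) responses for cluster.local

}

loop # detect simple forwarding loops and halt the CoreDNS process if one is found

reload # automatically reload the Corefile when it changes; after editing the ConfigMap, allow about 2 minutes for the change to take effect

loadbalance # round-robin DNS load balancer: randomizes the order of A, AAAA, and MX records in each answer

}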

#

root@k8s-ctr:~# cat /etc/resolv.conf

nameserver 127.0.0.53

options edns0 trust-ad

search .

root@k8s-ctr:~# resolvectl

Global

Protocols: -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

resolv.conf mode: stub

Link 2 (eth0)

Current Scopes: DNS

Protocols: +DefaultRoute -LLMNR -mDNS -DNSOverTLS DNSSEC=no/unsupported

Current DNS Server: 10.0.2.3

DNS Servers: 10.0.2.3 fd17:625c:f037:2::3

Link 3 (eth1)

Current Scopes: none

# Check the pod's DNS settings

root@k8s-ctr:~# kubectl exec -it curl-pod -- cat /etc/resolv.conf

search default.svc.cluster.local svc.cluster.local cluster.local

nameserver 10.96.0.10

options ndots:5
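With ndots:5, a name with fewer than five dots, such as www.google.com, is tried against every entry in the search list before it is tried as-is. The following is a minimal sketch of that glibc-style lookup order, not the resolver's actual code. The helper name expand_query and its argument order are made up for illustration, and other resolver implementations may order the candidates differently.

```shell
# expand_query NAME NDOTS SEARCH_DOMAIN...
# Prints, in order, the fully qualified names a glibc-style resolver would try.
# Hypothetical helper for illustration only.
expand_query() (
  name=$1
  ndots=$2
  shift 2

  # A trailing dot marks the name as fully qualified: the search list is skipped.
  case $name in
    *.) printf '%s\n' "$name"; return 0 ;;
  esac

  # Count the dots in the name.
  dots=$(( $(printf '%s' "$name" | tr -cd '.' | wc -c) ))

  # With at least ndots dots, the name is tried as-is before the search list.
  if [ "$dots" -ge "$ndots" ]; then
    printf '%s.\n' "$name"
  fi

  for domain in "$@"; do
    printf '%s.%s.\n' "$name" "$domain"
  done

  # With fewer dots, the name is tried as-is only after the search list.
  if [ "$dots" -lt "$ndots" ]; then
    printf '%s.\n' "$name"
  fi
)

expand_query www.google.com 5 default.svc.cluster.local svc.cluster.local cluster.local
# www.google.com.default.svc.cluster.local.
# www.google.com.svc.cluster.local.
# www.google.com.cluster.local.
# www.google.com.
```

The resolver stops at the first candidate that returns an answer, so an external name like this typically costs three NXDOMAIN round trips before the final lookup succeeds.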

# Verify DNS queries from the pod

NodeLocal DNSCache

NodeLocal DNSCache improves DNS performance by running a DNS caching agent (CoreDNS) locally on each node.

CoreDNS runs on every node as a DaemonSet, and pods send their DNS queries to this local cache.

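Advantages:

- Avoids iptables DNAT and conntrack → lower latency and better reliability

- Even when CoreDNS is unavailable, the per-node cache can still answer queries for entries it has already cached

- Per-node DNS metrics can be collected with Prometheus

- Pods keep a CoreDNS Service IP such as 10.96.0.10 as their nameserver; with kube-proxy in iptables mode, the node-local cache also listens on that IP on each node, so queries are answered locally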

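Installing NodeLocal DNSCache

The local listen IP address for NodeLocal DNSCache can be any address that is guaranteed not to collide with an existing IP in the cluster.

An address with local scope is recommended, for example from the IPv4 link-local range 169.254.0.0/16 or the IPv6 unique local address range fd00::/8.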

root@k8s-ctr:~# wget https://github.com/kubernetes/kubernetes/raw/master/cluster/addons/dns/nodelocaldns/nodelocaldns.yaml

# Store the ClusterIP of the kube-dns (CoreDNS) Service in the kubedns variable

root@k8s-ctr:~# kubedns=`kubectl get svc kube-dns -n kube-system -o jsonpath={.spec.clusterIP}`

root@k8s-ctr:~# domain='cluster.local' ## default value

root@k8s-ctr:~# localdns='169.254.20.10' ## default value

root@k8s-ctr:~# echo $kubedns $domain $localdns

# kube-proxy is running in iptables mode, so run the following command

sed -i "s/__PILLAR__LOCAL__DNS__/$localdns/g; s/__PILLAR__DNS__DOMAIN__/$domain/g; s/__PILLAR__DNS__SERVER__/$kubedns/g" nodelocaldns.yaml
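To see what this substitution does without touching the manifest, the same sed expressions can be run against a single line on stdin. This is a minimal sketch: render_pillars is a hypothetical helper name, and the sample template line is only an illustration, not the full content of nodelocaldns.yaml.

```shell
# render_pillars LOCAL_DNS DOMAIN KUBE_DNS
# Reads a template on stdin and writes it to stdout with the three
# placeholders replaced. Hypothetical helper for illustration only.
render_pillars() {
  sed "s/__PILLAR__LOCAL__DNS__/$1/g; s/__PILLAR__DNS__DOMAIN__/$2/g; s/__PILLAR__DNS__SERVER__/$3/g"
}

# Illustrative template line; the real manifest contains much more than this.
printf '%s\n' 'args: [ "-localip", "__PILLAR__LOCAL__DNS__,__PILLAR__DNS__SERVER__" ]' \
  | render_pillars 169.254.20.10 cluster.local 10.96.0.10
# prints: args: [ "-localip", "169.254.20.10,10.96.0.10" ]
```

Any __PILLAR__ token that the three expressions do not cover is left untouched, so running grep __PILLAR__ on the rendered file is a quick way to see which placeholders remain.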

# Install nodelocaldns

kubectl apply -f nodelocaldns.yaml

root@k8s-ctr:~# kubectl get pod -n kube-system -l k8s-app=node-local-dns -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

node-local-dns-jxg5j 0/1 ContainerCreating 0 15s 192.168.10.101 k8s-w1 <none> <none>

node-local-dns-vqjcw 0/1 ContainerCreating 0 15s 192.168.10.100 k8s-ctr <none> <none>

# Changes (diff of the iptables-save output before and after the installation)

1c1

< # Generated by iptables-save v1.8.10 (nf_tables) on Sun Aug 3 03:30:16 2025

---

> # Generated by iptables-save v1.8.10 (nf_tables) on Sun Aug 3 03:34:03 2025

19,20c19,20

< # Completed on Sun Aug 3 03:30:16 2025

< # Generated by iptables-save v1.8.10 (nf_tables) on Sun Aug 3 03:30:16 2025

---

> # Completed on Sun Aug 3 03:34:03 2025

> # Generated by iptables-save v1.8.10 (nf_tables) on Sun Aug 3 03:34:03 2025

25a26,29

> -A PREROUTING -d 10.96.0.10/32 -p udp -m udp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A PREROUTING -d 10.96.0.10/32 -p tcp -m tcp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A PREROUTING -d 169.254.20.10/32 -p udp -m udp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A PREROUTING -d 169.254.20.10/32 -p tcp -m tcp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

26a31,42

> -A OUTPUT -s 10.96.0.10/32 -p tcp -m tcp --sport 8080 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -d 10.96.0.10/32 -p tcp -m tcp --dport 8080 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -d 10.96.0.10/32 -p udp -m udp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -d 10.96.0.10/32 -p tcp -m tcp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -s 10.96.0.10/32 -p udp -m udp --sport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -s 10.96.0.10/32 -p tcp -m tcp --sport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -s 169.254.20.10/32 -p tcp -m tcp --sport 8080 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -d 169.254.20.10/32 -p tcp -m tcp --dport 8080 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -d 169.254.20.10/32 -p udp -m udp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -d 169.254.20.10/32 -p tcp -m tcp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -s 169.254.20.10/32 -p udp -m udp --sport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

> -A OUTPUT -s 169.254.20.10/32 -p tcp -m tcp --sport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK

38,39c54,55

< # Completed on Sun Aug 3 03:30:16 2025

< # Generated by iptables-save v1.8.10 (nf_tables) on Sun Aug 3 03:30:16 2025

---

> # Completed on Sun Aug 3 03:34:03 2025

> # Generated by iptables-save v1.8.10 (nf_tables) on Sun Aug 3 03:34:03 2025

41c57

< :INPUT ACCEPT [2529880:1664332676]

---

> :INPUT ACCEPT [2587216:1710816416]

43c59

< :OUTPUT ACCEPT [2495975:697327307]

---

> :OUTPUT ACCEPT [2549811:710187168]

54a71,74

> -A INPUT -d 10.96.0.10/32 -p udp -m udp --dport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT

> -A INPUT -d 10.96.0.10/32 -p tcp -m tcp --dport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT

> -A INPUT -d 169.254.20.10/32 -p udp -m udp --dport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT

> -A INPUT -d 169.254.20.10/32 -p tcp -m tcp --dport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT

64a85,88

> -A OUTPUT -s 10.96.0.10/32 -p udp -m udp --sport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT

> -A OUTPUT -s 10.96.0.10/32 -p tcp -m tcp --sport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT

> -A OUTPUT -s 169.254.20.10/32 -p udp -m udp --sport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT

> -A OUTPUT -s 169.254.20.10/32 -p tcp -m tcp --sport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT

84,85c108,109

< # Completed on Sun Aug 3 03:30:16 2025

< # Generated by iptables-save v1.8.10 (nf_tables) on Sun Aug 3 03:30:16 2025

---

> # Completed on Sun Aug 3 03:34:03 2025

> # Generated by iptables-save v1.8.10 (nf_tables) on Sun Aug 3 03:34:03 2025

104a129

> :KUBE-SEP-7WUSMAWELYMGPE6K - [0:0]

109a135,136

> :KUBE-SEP-GLDEEPVHEJVFFXWX - [0:0]

> :KUBE-SEP-GOO6UKDPSLRGQLZV - [0:0]

111a139

> :KUBE-SEP-QCNNMMMXLS477KT5 - [0:0]

117a146

> :KUBE-SVC-BRK3P4PPQWCLKOAN - [0:0]

121a151

> :KUBE-SVC-FXR4M2CWOGAZGGYD - [0:0]

154a185,186

> -A KUBE-SEP-7WUSMAWELYMGPE6K -s 172.20.1.99/32 -m comment --comment "kube-system/kube-dns-upstream:dns-tcp" -j KUBE-MARK-MASQ

> -A KUBE-SEP-7WUSMAWELYMGPE6K -p tcp -m comment --comment "kube-system/kube-dns-upstream:dns-tcp" -m tcp -j DNAT --to-destination 172.20.1.99:53

164a197,200

> -A KUBE-SEP-GLDEEPVHEJVFFXWX -s 172.20.0.23/32 -m comment --comment "kube-system/kube-dns-upstream:dns-tcp" -j KUBE-MARK-MASQ

> -A KUBE-SEP-GLDEEPVHEJVFFXWX -p tcp -m comment --comment "kube-system/kube-dns-upstream:dns-tcp" -m tcp -j DNAT --to-destination 172.20.0.23:53

> -A KUBE-SEP-GOO6UKDPSLRGQLZV -s 172.20.0.23/32 -m comment --comment "kube-system/kube-dns-upstream:dns" -j KUBE-MARK-MASQ

> -A KUBE-SEP-GOO6UKDPSLRGQLZV -p udp -m comment --comment "kube-system/kube-dns-upstream:dns" -m udp -j DNAT --to-destination 172.20.0.23:53

168a205,206

> -A KUBE-SEP-QCNNMMMXLS477KT5 -s 172.20.1.99/32 -m comment --comment "kube-system/kube-dns-upstream:dns" -j KUBE-MARK-MASQ

> -A KUBE-SEP-QCNNMMMXLS477KT5 -p udp -m comment --comment "kube-system/kube-dns-upstream:dns" -m udp -j DNAT --to-destination 172.20.1.99:53

182a221

> -A KUBE-SERVICES -d 10.96.170.42/32 -p tcp -m comment --comment "kube-system/kube-dns-upstream:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-SVC-BRK3P4PPQWCLKOAN

186a226

> -A KUBE-SERVICES -d 10.96.170.42/32 -p udp -m comment --comment "kube-system/kube-dns-upstream:dns cluster IP" -m udp --dport 53 -j KUBE-SVC-FXR4M2CWOGAZGGYD

189a230,232

> -A KUBE-SVC-BRK3P4PPQWCLKOAN ! -s 10.244.0.0/16 -d 10.96.170.42/32 -p tcp -m comment --comment "kube-system/kube-dns-upstream:dns-tcp cluster IP" -m tcp --dport 53 -j KUBE-MARK-MASQ

> -A KUBE-SVC-BRK3P4PPQWCLKOAN -m comment --comment "kube-system/kube-dns-upstream:dns-tcp -> 172.20.0.23:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-GLDEEPVHEJVFFXWX

> -A KUBE-SVC-BRK3P4PPQWCLKOAN -m comment --comment "kube-system/kube-dns-upstream:dns-tcp -> 172.20.1.99:53" -j KUBE-SEP-7WUSMAWELYMGPE6K

199a243,245

> -A KUBE-SVC-FXR4M2CWOGAZGGYD ! -s 10.244.0.0/16 -d 10.96.170.42/32 -p udp -m comment --comment "kube-system/kube-dns-upstream:dns cluster IP" -m udp --dport 53 -j KUBE-MARK-MASQ

> -A KUBE-SVC-FXR4M2CWOGAZGGYD -m comment --comment "kube-system/kube-dns-upstream:dns -> 172.20.0.23:53" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-GOO6UKDPSLRGQLZV

> -A KUBE-SVC-FXR4M2CWOGAZGGYD -m comment --comment "kube-system/kube-dns-upstream:dns -> 172.20.1.99:53" -j KUBE-SEP-QCNNMMMXLS477KT5

213c259

< # Completed on Sun Aug 3 03:30:16 2025

---

> # Completed on Sun Aug 3 03:34:03 2025
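The diff is long, so it can help to reduce it to a count of the rules that NodeLocal DNSCache added, grouped by chain and target. A minimal sketch, assuming the diff or iptables-save output has been saved as text and is fed on stdin; summarize_nodelocal_rules is a hypothetical helper name.

```shell
# summarize_nodelocal_rules
# Reads iptables-save or diff output on stdin and counts the rules whose
# comment mentions "NodeLocal DNS Cache", grouped by chain and target.
# Hypothetical helper for illustration only.
summarize_nodelocal_rules() {
  awk '
    /NodeLocal DNS Cache/ {
      sub(/^> /, "")          # drop the diff marker if present
      count[$2 " " $NF]++     # $2 is the chain, the last field is the target
    }
    END { for (k in count) print k, count[k] }
  ' | sort
}

summarize_nodelocal_rules <<'EOF'
> -A PREROUTING -d 10.96.0.10/32 -p udp -m udp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK
> -A PREROUTING -d 10.96.0.10/32 -p tcp -m tcp --dport 53 -m comment --comment "NodeLocal DNS Cache: skip conntrack" -j NOTRACK
> -A INPUT -d 10.96.0.10/32 -p udp -m udp --dport 53 -m comment --comment "NodeLocal DNS Cache: allow DNS traffic" -j ACCEPT
EOF
# INPUT ACCEPT 1
# PREROUTING NOTRACK 2
```

The NOTRACK entries are what lets DNS traffic to 10.96.0.10 and 169.254.20.10 skip conntrack, while the ACCEPT entries allow that traffic through the filter table.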

NodeLocalDns + Cilium Local Redirect Policy

Cilium's Local Redirect Policy redirects traffic destined for a specific Kubernetes Service directly to a backend pod on the same node.

CiliumLocalRedirectPolicy is provided as a CustomResourceDefinition.

A local redirect policy uses eBPF to redirect pod traffic destined for an IP address and port/protocol tuple, or for a Kubernetes Service, to a backend pod on the same node.

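Advantages:

- Redirection works without iptables → eBPF-based processing is faster and more flexible

- The recommended approach on Cilium-based clusters

- Because the redirect stays on the node, there are fewer network hops → lower DNS response latency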

root@k8s-ctr:~# helm upgrade cilium cilium/cilium --namespace kube-system --reuse-values --set localRedirectPolicy=true --version 1.17.6

Release "cilium" has been upgraded. Happy Helming!

NAME: cilium

LAST DEPLOYED: Sun Aug 3 03:40:56 2025

NAMESPACE: kube-system

STATUS: deployed

REVISION: 4

TEST SUITE: None

NOTES:

You have successfully installed Cilium with Hubble Relay and Hubble UI.

Your release version is 1.17.6.

For any further help, visit https://docs.cilium.io/en/v1.17/gettinghelp

root@k8s-ctr:~# kubectl rollout restart deploy cilium-operator -n kube-system

kubectl rollout restart ds cilium -n kube-system

deployment.apps/cilium-operator restarted

daemonset.apps/cilium restarted

root@k8s-ctr:~# wget https://raw.githubusercontent.com/cilium/cilium/1.17.6/examples/kubernetes-local-redirect/node-local-dns.yaml

root@k8s-ctr:~# kubedns=$(kubectl get svc kube-dns -n kube-system -o jsonpath={.spec.clusterIP})

sed -i "s/__PILLAR__DNS__SERVER__/$kubedns/g;" node-local-dns.yaml

vi -d nodelocaldns.yaml node-local-dns.yaml

## before

args: [ "-localip", "169.254.20.10,10.96.0.10", "-conf", "/etc/Corefile", "-upstreamsvc", "kube-dns-upstream" ]

## after

args: [ "-localip", "169.254.20.10,10.96.0.10", "-conf", "/etc/Corefile", "-upstreamsvc", "kube-dns-upstream", "-skipteardown=true", "-setupinterface=false", "-setupiptables=false" ]

# Deploy

# Modify Node-local DNS cache’s deployment yaml to pass these additional arguments to node-cache:

## -skipteardown=true, -setupinterface=false, and -setupiptables=false.

root@k8s-ctr:~# kubectl apply -f node-local-dns.yaml

serviceaccount/node-local-dns configured

service/kube-dns-upstream configured

configmap/node-local-dns configured

daemonset.apps/node-local-dns configured

root@k8s-ctr:~# kubectl -n kube-system rollout restart ds node-local-dns

daemonset.apps/node-local-dns restarted

# Apply the CiliumLocalRedirectPolicy resource

root@k8s-ctr:~# wget https://raw.githubusercontent.com/cilium/cilium/1.17.6/examples/kubernetes-local-redirect/node-local-dns-lrp.yaml

root@k8s-ctr:~# cat node-local-dns-lrp.yaml | yq

{

"apiVersion": "cilium.io/v2",

"kind": "CiliumLocalRedirectPolicy",

"metadata": {

"name": "nodelocaldns",

"namespace": "kube-system"

},

"spec": {

"redirectFrontend": {

"serviceMatcher": {

"serviceName": "kube-dns",

"namespace": "kube-system"

}

},

"redirectBackend": {

"localEndpointSelector": {

"matchLabels": {

"k8s-app": "node-local-dns"

}

},

"toPorts": [

{

"port": "53",

"name": "dns",

"protocol": "UDP"

},

{

"port": "53",

"name": "dns-tcp",

"protocol": "TCP"

}

]

}

}

}

root@k8s-ctr:~# kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.17.6/examples/kubernetes-local-redirect/node-local-dns-lrp.yaml

ciliumlocalredirectpolicy.cilium.io/nodelocaldns created

root@k8s-ctr:~# kubectl get CiliumLocalRedirectPolicy -A

NAMESPACE NAME AGE

kube-system nodelocaldns 15s

root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -c cilium-agent -- cilium-dbg lrp list

LRP namespace LRP name FrontendType Matching Service

kube-system nodelocaldns clusterIP + all svc ports kube-system/kube-dns

| 10.96.0.10:9153/TCP ->

| 10.96.0.10:53/UDP -> 172.20.0.247:53(kube-system/node-local-dns-4sh8s),

| 10.96.0.10:53/TCP -> 172.20.0.247:53(kube-system/node-local-dns-4sh8s),

root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -c cilium-agent -- cilium-dbg service list | grep LocalRedirect

16 10.96.0.10:53/UDP LocalRedirect 1 => 172.20.0.247:53/UDP (active)

17 10.96.0.10:53/TCP LocalRedirect 1 => 172.20.0.247:53/TCP (active)
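The service list output can be reduced to a frontend-to-backend mapping for the LocalRedirect entries only. A minimal sketch, assuming the whitespace-separated layout shown above (ID, frontend, service type, backend ID, =>, backend address, state) and saved output on stdin; lrp_backends is a hypothetical helper name.

```shell
# lrp_backends
# Reads "cilium-dbg service list" style output on stdin and prints
# "frontend -> backend" for LocalRedirect entries.
# Hypothetical helper for illustration only.
lrp_backends() {
  awk '$3 == "LocalRedirect" { print $2, "->", $6 }'
}

lrp_backends <<'EOF'
16 10.96.0.10:53/UDP LocalRedirect 1 => 172.20.0.247:53/UDP (active)
17 10.96.0.10:53/TCP LocalRedirect 1 => 172.20.0.247:53/TCP (active)
EOF
# 10.96.0.10:53/UDP -> 172.20.0.247:53/UDP
# 10.96.0.10:53/TCP -> 172.20.0.247:53/TCP
```

Both frontends are the kube-dns ClusterIP, and both backends are the node-local-dns pod IP on the same node, which is the mapping the policy is meant to produce.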

# Lookup test

root@k8s-ctr:~# kubectl exec -it curl-pod -- nslookup www.google.com

;; Got recursion not available from 10.96.0.10

;; Got recursion not available from 10.96.0.10

;; Got recursion not available from 10.96.0.10

Server: 10.96.0.10

Address: 10.96.0.10#53

Non-authoritative answer:

Name: www.google.com

Address: 142.250.197.228

Name: www.google.com

Address: 2404:6800:4005:825::2004

root@k8s-ctr:~# kubectl -n kube-system logs -l k8s-app=node-local-dns -f

}

cluster.local.:53 on 0.0.0.0

in-addr.arpa.:53 on 0.0.0.0

ip6.arpa.:53 on 0.0.0.0

.:53 on 0.0.0.0

[INFO] plugin/reload: Running configuration MD5 = a6f22391ff908a19377a86ff31bf739d

CoreDNS-1.6.7

linux/arm64, go1.11.13,

[INFO] 127.0.0.1:33695 - 56320 "HINFO IN 5247980365557040740.177345076339220906.cluster.local. udp 70 false 512" NXDOMAIN qr,aa,rd 163 0.0082645s

[INFO] 127.0.0.1:48471 - 54694 "HINFO IN 1543998841170573317.4902409132048159698. udp 57 false 512" NXDOMAIN qr,rd,ra 132 0.01122275s

}

cluster.local.:53 on 0.0.0.0

in-addr.arpa.:53 on 0.0.0.0

ip6.arpa.:53 on 0.0.0.0

.:53 on 0.0.0.0

[INFO] plugin/reload: Running configuration MD5 = a6f22391ff908a19377a86ff31bf739d

CoreDNS-1.6.7

linux/arm64, go1.11.13,

[INFO] 127.0.0.1:45957 - 58048 "HINFO IN 1044367524764233318.210954031071678938.cluster.local. udp 70 false 512" NXDOMAIN qr,aa,rd 163 0.002819667s

[INFO] 127.0.0.1:44288 - 61237 "HINFO IN 9085978463054953385.7169946617125649978. udp 57 false 512" NXDOMAIN qr,rd,ra 132 0.109506291s

[INFO] 172.20.1.137:39223 - 10342 "A IN www.google.com.default.svc.cluster.local. udp 58 false 512" NXDOMAIN qr,aa,rd 151 0.007179209s

[INFO] 172.20.1.137:34028 - 2778 "A IN www.google.com.svc.cluster.local. udp 50 false 512" NXDOMAIN qr,aa,rd 143 0.007172541s

[INFO] 172.20.1.137:54285 - 60186 "A IN www.google.com.cluster.local. udp 46 false 512" NXDOMAIN qr,aa,rd 139 0.001394917s

[INFO] 172.20.1.137:43802 - 17683 "A IN www.google.com. udp 32 false 512" NOERROR qr,rd,ra 62 0.01623825s

[INFO] 172.20.1.137:34724 - 40368 "AAAA IN www.google.com. udp 32 false 512" NOERROR qr,rd,ra 74 0.007036042s
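The query log lines are easier to read when reduced to query type, name, and response code. A minimal sketch, assuming the log format shown above and saved log text on stdin; summarize_queries is a hypothetical helper name.

```shell
# summarize_queries
# Reads node-local-dns (CoreDNS) log lines on stdin and prints
# "TYPE NAME RCODE" for each logged query.
# Hypothetical helper for illustration only.
summarize_queries() {
  awk '$1 == "[INFO]" && $6 == "IN" {
    qtype = $5
    gsub(/"/, "", qtype)   # the type field carries the opening quote
    print qtype, $7, $12
  }'
}

summarize_queries <<'EOF'
[INFO] 172.20.1.137:54285 - 60186 "A IN www.google.com.cluster.local. udp 46 false 512" NXDOMAIN qr,aa,rd 139 0.001394917s
[INFO] 172.20.1.137:43802 - 17683 "A IN www.google.com. udp 32 false 512" NOERROR qr,rd,ra 62 0.01623825s
EOF
# A www.google.com.cluster.local. NXDOMAIN
# A www.google.com. NOERROR
```

Read this way, the log shows the pod's search list at work: each cluster.local suffix is tried and returns NXDOMAIN before the plain name resolves with NOERROR.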

This exercise confirmed that DNS queries are delivered through Cilium's Local Redirect Policy to the node-local-dns pod on the same node and handled there, and that the overall flow, including caching, works as expected.
