This post summarizes what I studied in week 8 of the Cloudnet Cilium study.

Cilium Security

Kubernetes' built-in network policy (NetworkPolicy) can restrict traffic.

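However, it has several limitations.

- Only L3/L4 are supported → control stays limited to IPs and ports

- No concept of identity → when a pod restarts and its IP changes, managing policies becomes cumbersome

- No application-level (L7) control → security at the granularity of HTTP methods or paths is impossible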

Cilium Security is what fills these gaps.

Cilium is built around identity-based security and can extend enforcement layer by layer, from L3 to L4 to L7.

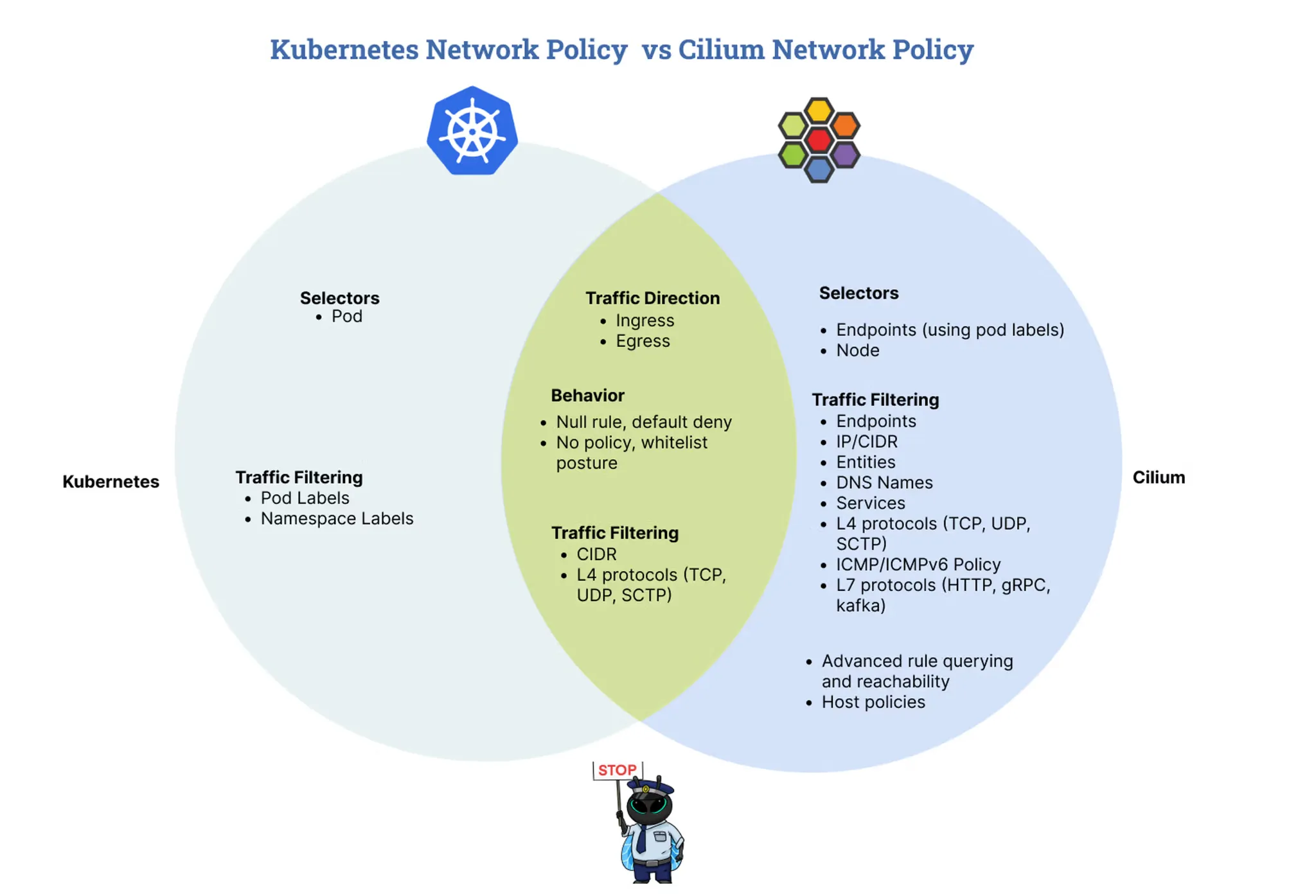

Cilium vs Kubernetes NetworkPolicy

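| Aspect | Kubernetes NetworkPolicy | Cilium NetworkPolicy |
|---|---|---|
| Supported layers | L3, L4 | L3, L4, L7 |
| Basis | IP, namespace, label | Identity (label-based) |
| Scope | Per namespace | Per namespace + cluster-wide (CiliumClusterwideNetworkPolicy, CCNP) |
| Characteristics | Basic pod-to-pod traffic restriction | L7 control via the Envoy proxy, identity-based policies |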

With Cilium you can shift security from being IP-centric to identity-centric, and you can additionally control L7, which makes it far more extensible.

Identity-based security (Layer 3)

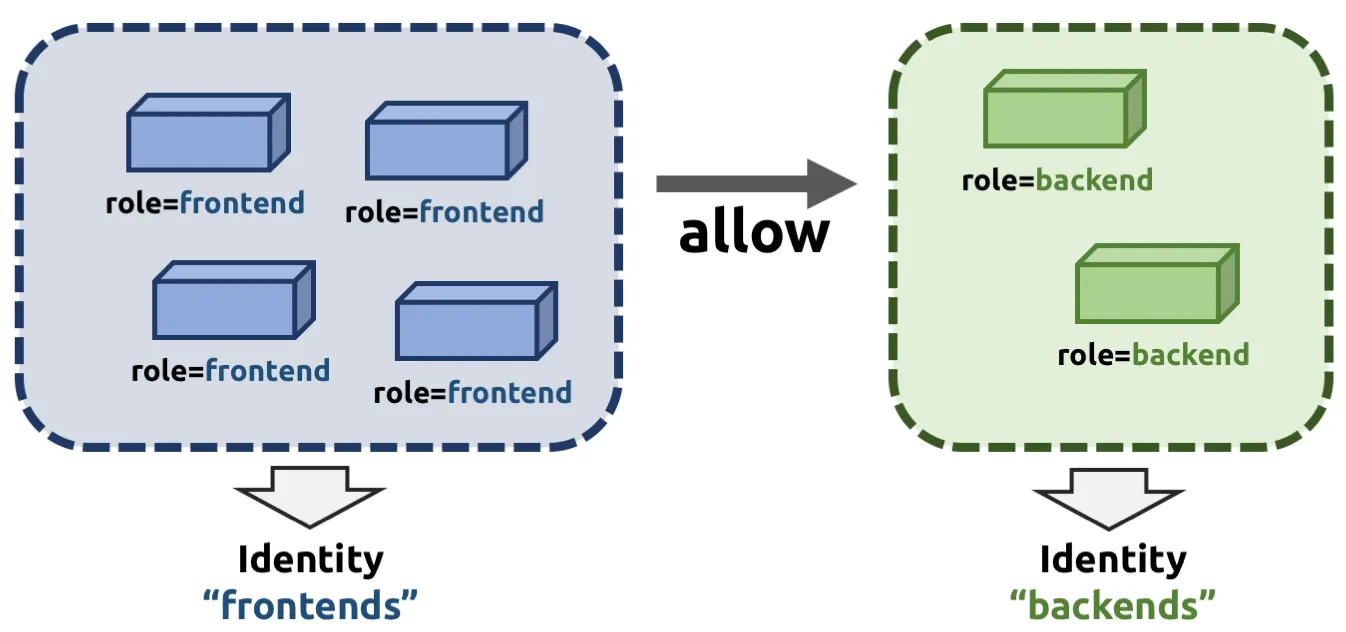

Identity

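In Cilium, an identity is something like a unique name tag that distinguishes pods or containers.

This tag is derived from labels, and pods with the same labels share the same identity.

For example:

- Pods labeled role=frontend all share one identity

- Pods labeled role=backend share a different identity

Pods are grouped this way. An identity is created as follows:

- When a pod is created, Cilium detects it and registers the pod as an endpoint.

- Cilium checks the labels attached to the registered endpoint and assigns the identity number that corresponds to that label combination.

- When a pod's labels change → Cilium detects the change → switches the endpoint state to waiting-for-identity → assigns a new identity.

In other words, labels are the basis of identity, and policies are therefore automatically reapplied based on labels.

So if you change the labels of a running workload, you can see its identity value change immediately. However, in a large cluster, changing labels frequently causes frequent identity allocation, which can degrade performance, so it is recommended to limit the set of identity-relevant labels.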
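The label-to-identity rule above can be sketched as a lookup keyed on the set of identity-relevant labels. This is a minimal illustration, not Cilium's allocator: the class name IdentityAllocator, the starting ID of 256, and the prefix filter standing in for "limiting identity-relevant labels" are all assumptions.

```python
from typing import Dict, FrozenSet, Iterable


class IdentityAllocator:
    """Hypothetical allocator: one numeric ID per distinct set of relevant labels."""

    def __init__(self, ignored_prefixes: Iterable[str] = (), first_id: int = 256):
        # Label keys starting with these prefixes do not influence the identity
        # (an assumed stand-in for restricting identity-relevant labels).
        self.ignored_prefixes = tuple(ignored_prefixes)
        self.next_id = first_id
        self.ids: Dict[FrozenSet[str], int] = {}

    def relevant(self, labels: Dict[str, str]) -> FrozenSet[str]:
        return frozenset(
            f"{k}={v}" for k, v in labels.items()
            if not k.startswith(self.ignored_prefixes)
        )

    def identity_for(self, labels: Dict[str, str]) -> int:
        key = self.relevant(labels)
        if key not in self.ids:  # unseen label combination -> allocate a new ID
            self.ids[key] = self.next_id
            self.next_id += 1
        return self.ids[key]


alloc = IdentityAllocator(ignored_prefixes=("pod-template-hash",))
a = alloc.identity_for({"k8s-app": "kube-dns", "pod-template-hash": "674b8bbfcf"})
b = alloc.identity_for({"k8s-app": "kube-dns", "pod-template-hash": "8297z"})
c = alloc.identity_for({"k8s-app": "kube-dns", "app": "testing"})
print(a, b, c)  # 256 256 257
```

Two pods that differ only in an ignored label share one identity, while adding app=testing produces a new one, which mirrors what happened to coredns in the lab below.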

> kubectl get ciliumendpoints.cilium.io -n kube-system

NAME SECURITY IDENTITY ENDPOINT STATE IPV4 IPV6

coredns-674b8bbfcf-8qhhb 18821 ready 10.244.0.181

coredns-674b8bbfcf-xfbpx 18821 ready 10.244.0.61

hubble-relay-fdd49b976-vkxqt 16039 ready 10.244.2.66

hubble-ui-655f947f96-2dz8s 5126 ready 10.244.0.148

metrics-server-5dd7b49d79-cfgcw 8383 ready 10.244.1.107

> kubectl get ciliumidentities.cilium.io

NAME NAMESPACE AGE

13988 default 7d13h

16039 kube-system 7d13h

18821 kube-system 7d13h

1980 default 7d13h

25791 local-path-storage 7d13h

44434 cilium-monitoring 7d13h

50195 cilium-monitoring 7d13h

5126 kube-system 7d13h

8383 kube-system 7d13h

# Add the label below to spec.template.metadata.labels of the coredns deployment and check that it is reflected

# The change is applied as coredns rolls out new pods

> kubectl edit deploy -n kube-system coredns
...
  template:
    metadata:
      labels:
        app: testing # added
        k8s-app: kube-dns

> kubectl exec -it -n kube-system ds/cilium -- cilium identity list

ID LABELS

1 reserved:host

reserved:kube-apiserver

2 reserved:world

3 reserved:unmanaged

4 reserved:health

5 reserved:init

6 reserved:remote-node

7 reserved:kube-apiserver

reserved:remote-node

8 reserved:ingress

9 reserved:world-ipv4

10 reserved:world-ipv6

1980 k8s:app=iperf3-client

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

5126 k8s:app.kubernetes.io/name=hubble-ui

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-ui

8383 k8s:app.kubernetes.io/instance=metrics-server

k8s:app.kubernetes.io/name=metrics-server

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=metrics-server

k8s:io.kubernetes.pod.namespace=kube-system

13988 k8s:app=iperf3-server

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

16039 k8s:app.kubernetes.io/name=hubble-relay

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-relay

18821 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

25791 k8s:app=local-path-provisioner

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=local-path-storage

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=local-path-provisioner-service-account

k8s:io.kubernetes.pod.namespace=local-path-storage

44434 k8s:app=grafana

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=cilium-monitoring

47953 k8s:app=testing

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

50195 k8s:app=prometheus

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=prometheus-k8s

k8s:io.kubernetes.pod.namespace=cilium-monitoring

57638 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

k8s:study=8w

Identity management

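Identities are valid across the entire cluster. That is, even if multiple pods or containers are started on several cluster nodes, all of them resolve to and share a single identity as long as they share the identity-relevant labels.

Endpoint identities are resolved through a shared, cluster-wide store: either a distributed key-value store or, in the default CRD-based allocation mode used in this lab, CiliumIdentity custom resources, which is what kubectl get ciliumidentities.cilium.io listed above.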
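Why every node ends up with the same number can be sketched with a single shared store offering an atomic get-or-create. This is a simplified model under stated assumptions: SharedIdentityStore and NodeAgent are hypothetical names, and a lock-protected dict stands in for the real key-value store or CRD backend.

```python
import threading
from typing import Dict, FrozenSet


class SharedIdentityStore:
    """Hypothetical stand-in for the cluster-wide identity store."""

    def __init__(self, first_id: int = 256):
        self._lock = threading.Lock()
        self._ids: Dict[FrozenSet[str], int] = {}
        self._next = first_id

    def get_or_create(self, labels: FrozenSet[str]) -> int:
        # Atomic get-or-create: every caller asking for the same label set
        # receives the same ID, no matter which node it runs on.
        with self._lock:
            if labels not in self._ids:
                self._ids[labels] = self._next
                self._next += 1
            return self._ids[labels]


class NodeAgent:
    """Hypothetical per-node agent that resolves identities via the shared store."""

    def __init__(self, name: str, store: SharedIdentityStore):
        self.name = name
        self.store = store

    def endpoint_identity(self, labels: Dict[str, str]) -> int:
        return self.store.get_or_create(frozenset(f"{k}={v}" for k, v in labels.items()))


store = SharedIdentityStore()
w1 = NodeAgent("k8s-w1", store)
w2 = NodeAgent("k8s-w2", store)
labels = {"org": "empire", "class": "mediabot"}
print(w1.endpoint_identity(labels) == w2.endpoint_identity(labels))  # True
```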

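Port restriction (Layer 4)

L4 policies restrict inbound and outbound ports.

For example, you can allow frontend to send traffic only to port 443, and backend to accept traffic only on port 443.

- No policy → all ports allowed

- Once the first policy is loaded → the endpoint switches to allow-list mode

- Once any L4 rule is attached to a pod, access to every port not explicitly listed is blocked automatically → for operators, this structure prevents a port you never meant to allow from ending up open by mistake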
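The allow-list behavior in the list above can be sketched as a per-endpoint rule set that flips from "allow everything" to "allow only what is listed" once the first rule arrives. EndpointL4Policy is a hypothetical name, and the model ignores direction (ingress vs egress) for brevity.

```python
from dataclasses import dataclass, field
from typing import Set, Tuple


@dataclass
class EndpointL4Policy:
    """Hypothetical model of the port rules selecting one endpoint."""
    allowed: Set[Tuple[int, str]] = field(default_factory=set)
    has_policy: bool = False

    def add_rule(self, port: int, proto: str = "TCP") -> None:
        # Loading the first rule switches the endpoint into allow-list mode.
        self.has_policy = True
        self.allowed.add((port, proto.upper()))

    def permits(self, port: int, proto: str = "TCP") -> bool:
        if not self.has_policy:
            return True  # no policy selects the endpoint: everything is allowed
        return (port, proto.upper()) in self.allowed


backend = EndpointL4Policy()
print(backend.permits(8080))  # True  (no policy yet)
backend.add_rule(443)
print(backend.permits(443))   # True
print(backend.permits(8080))  # False (not listed -> implicitly denied)
```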

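Application protocol-level control (Layer 7)

Cilium can enforce policy up to L7 through the Envoy proxy.

What stands out is that, instead of simply dropping packets, it returns a rejection response such as HTTP 403.

This makes it easy for operators to tell whether a request was blocked by policy or failed because of a network problem.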
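The "403 instead of a silent drop" behavior can be sketched as a rule matcher that returns a status code. The method and path fields mirror the ones used in Cilium HTTP rules, but treating them as fully anchored regular expressions, and the function name l7_verdict, are assumptions; the real enforcement happens inside Envoy.

```python
import re
from typing import List, NamedTuple


class HttpRule(NamedTuple):
    method: str  # regular expression, e.g. "GET"
    path: str    # regular expression, e.g. "/v1/.*"


def l7_verdict(rules: List[HttpRule], method: str, path: str) -> int:
    """Return the status the client would see: 200 if forwarded, 403 if denied."""
    for rule in rules:
        if re.fullmatch(rule.method, method) and re.fullmatch(rule.path, path):
            return 200  # forwarded upstream (the upstream is assumed to answer 200)
    return 403  # denied at L7: the client gets an explicit answer, not a timeout


rules = [HttpRule(method="GET", path="/v1/.*")]
print(l7_verdict(rules, "GET", "/v1/status"))   # 200
print(l7_verdict(rules, "POST", "/v1/status"))  # 403
```

A 403 comes back immediately, whereas an L3/L4 drop shows up on the client only as a timeout, which is exactly the difference that helps separate policy denials from network faults.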

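Cilium's DNS-based policies offer a simple way to specify access control while handling the complicated parts, such as tracking DNS-to-IP mappings.

- Control egress access to services outside the cluster with DNS-based policies

- Allow-list subsets of DNS domains with patterns (or wildcards)

- Combine DNS, port, and L7 rules to restrict access to external services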
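The DNS-to-IP tracking mentioned above can be sketched as follows: the DNS proxy inspects responses, and only IPs returned for allowed names are opened for egress. FqdnEgressPolicy is a hypothetical name; the regex and IPs are illustrative values that happen to match the lab output further down.

```python
import re
from typing import Dict, Set


class FqdnEgressPolicy:
    """Hypothetical model: names are matched on DNS responses, and only
    IPs learned from matching responses become reachable."""

    def __init__(self, name_regex: str):
        self.name_re = re.compile(name_regex)
        self.allowed_ips: Dict[str, Set[str]] = {}  # ip -> names that resolved to it

    def observe_dns_response(self, name: str, ips: Set[str]) -> None:
        fqdn = name if name.endswith(".") else name + "."
        if self.name_re.fullmatch(fqdn):
            for ip in ips:
                self.allowed_ips.setdefault(ip, set()).add(fqdn)

    def allow_connect(self, ip: str) -> bool:
        # The datapath only sees IPs, so the decision is made per destination IP.
        return ip in self.allowed_ips


policy = FqdnEgressPolicy(r"^api[.]github[.]com[.]$")
policy.observe_dns_response("api.github.com", {"20.200.245.245"})
policy.observe_dns_response("support.github.com", {"185.199.108.133"})
print(policy.allow_connect("20.200.245.245"))   # True
print(policy.allow_connect("185.199.108.133"))  # False
```

Because the final check is per IP, any other name that resolves to an already-allowed IP is reachable as well.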

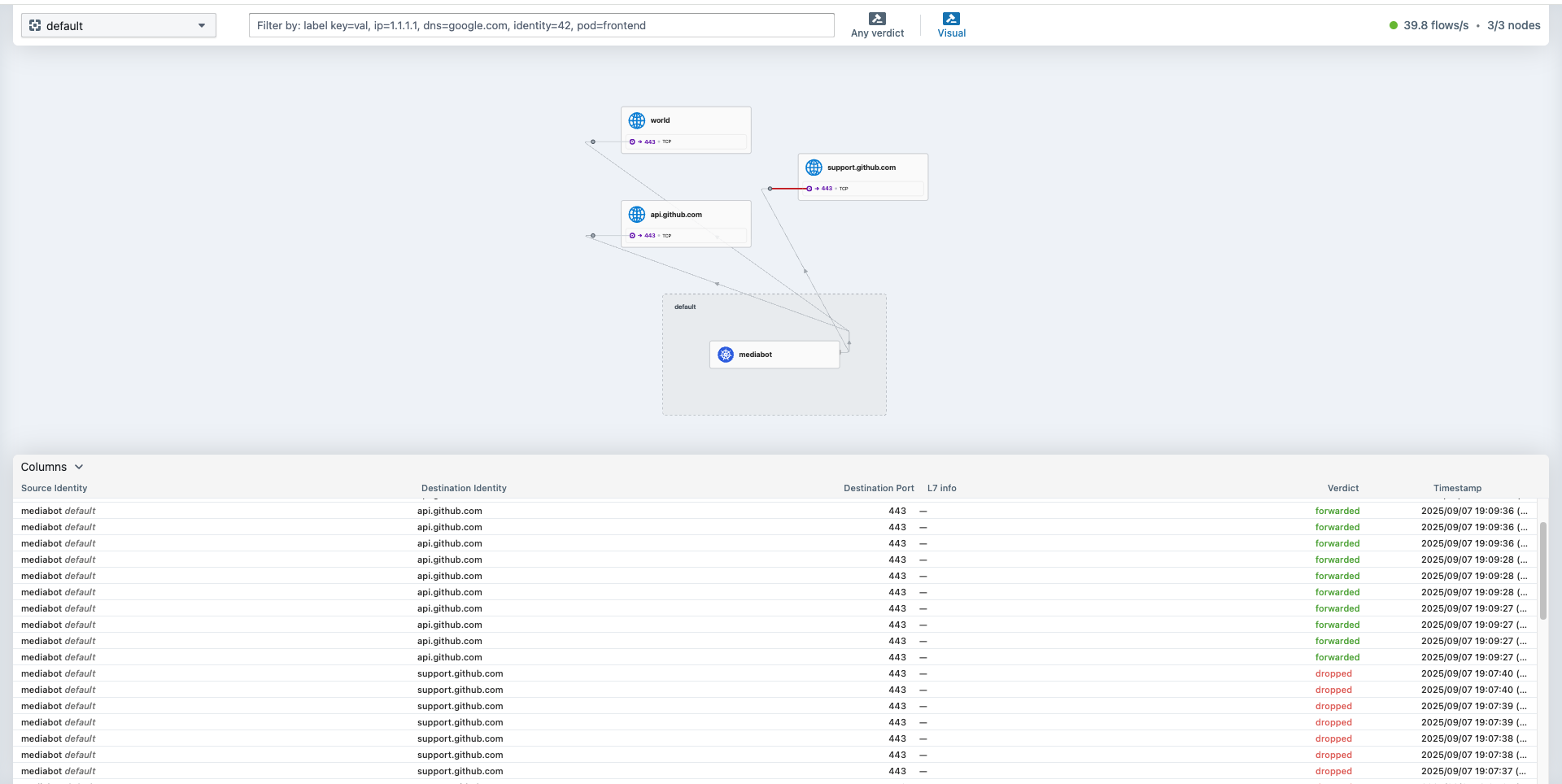

Lab 1) Applying DNS egress: allow the mediabot pod to access only api.github.com

Let's deploy a sample application for the lab.

root@k8s-ctr:~# cat << EOF > dns-sw-app.yaml
apiVersion: v1
kind: Pod
metadata:
  name: mediabot
  labels:
    org: empire
    class: mediabot
    app: mediabot
spec:
  containers:
  - name: mediabot
    image: quay.io/cilium/json-mock:v1.3.8@sha256:5aad04835eda9025fe4561ad31be77fd55309af8158ca8663a72f6abb78c2603
EOF

root@k8s-ctr:~# kubectl apply -f dns-sw-app.yaml

root@k8s-ctr:~# kubectl wait pod/mediabot --for=condition=Ready

root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -- cilium identity list

ID LABELS

1 reserved:host

reserved:kube-apiserver

2 reserved:world

3 reserved:unmanaged

4 reserved:health

5 reserved:init

6 reserved:remote-node

7 reserved:kube-apiserver

reserved:remote-node

8 reserved:ingress

9 reserved:world-ipv4

10 reserved:world-ipv6

1980 k8s:app=iperf3-client

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

5126 k8s:app.kubernetes.io/name=hubble-ui

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=hubble-ui

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-ui

8383 k8s:app.kubernetes.io/instance=metrics-server

k8s:app.kubernetes.io/name=metrics-server

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=metrics-server

k8s:io.kubernetes.pod.namespace=kube-system

13988 k8s:app=iperf3-server

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

16039 k8s:app.kubernetes.io/name=hubble-relay

k8s:app.kubernetes.io/part-of=cilium

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=hubble-relay

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=hubble-relay

18821 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

25791 k8s:app=local-path-provisioner

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=local-path-storage

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=local-path-provisioner-service-account

k8s:io.kubernetes.pod.namespace=local-path-storage

44434 k8s:app=grafana

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=cilium-monitoring

47953 k8s:app=testing

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

50195 k8s:app=prometheus

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=cilium-monitoring

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=prometheus-k8s

k8s:io.kubernetes.pod.namespace=cilium-monitoring

57609 k8s:app=mediabot

k8s:class=mediabot

k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=default

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=default

k8s:io.kubernetes.pod.namespace=default

k8s:org=empire

57638 k8s:io.cilium.k8s.namespace.labels.kubernetes.io/metadata.name=kube-system

k8s:io.cilium.k8s.policy.cluster=kind-myk8s

k8s:io.cilium.k8s.policy.serviceaccount=coredns

k8s:io.kubernetes.pod.namespace=kube-system

k8s:k8s-app=kube-dns

k8s:study=8w

root@k8s-ctr:~# kubectl get pods

NAME READY STATUS RESTARTS AGE

mediabot 1/1 Running 0 72s

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s https://api.github.com | head -1

HTTP/2 200

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s --max-time 5 https://support.github.com | head -1

HTTP/2 302

Now let's deploy a CiliumNetworkPolicy that allows the mediabot pod to access only api.github.com and verify the result.

root@k8s-ctr:~# cat << EOF | kubectl apply -f -
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "fqdn"
spec:
  endpointSelector:
    matchLabels:
      org: empire
      class: mediabot
  egress:
  - toFQDNs:
    - matchName: "api.github.com"
  - toEndpoints:
    - matchLabels:
        "k8s:io.kubernetes.pod.namespace": kube-system
        "k8s:k8s-app": kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: ANY
      rules:
        dns:
        - matchPattern: "*"
EOF

ciliumnetworkpolicy.cilium.io/fqdn created

root@k8s-ctr:~# kubectl get cnp

NAME AGE VALID

fqdn 18s True

root@k8s-ctr:~# kubectl exec -it -n kube-system ds/cilium -- cilium policy selectors

SELECTOR LABELS USERS IDENTITIES

&LabelSelector{MatchLabels:map[string]string{any.class: mediabot,any.org: empire,k8s.io.kubernetes.pod.namespace: default,},MatchExpressions:[]LabelSelectorRequirement{},} default/fqdn 1 37240

root@k8s-ctr:~# cilium config view | grep -i dns

dnsproxy-enable-transparent-mode true

dnsproxy-socket-linger-timeout 10

hubble-metrics dns drop tcp flow port-distribution icmp httpV2:exemplars=true;labelsContext=source_ip,source_namespace,source_workload,destination_ip,destination_namespace,destination_workload,traffic_direction

tofqdns-dns-reject-response-code refused

tofqdns-enable-dns-compression true

tofqdns-endpoint-max-ip-per-hostname 1000

tofqdns-idle-connection-grace-period 0s

tofqdns-max-deferred-connection-deletes 10000

tofqdns-preallocate-identities true

tofqdns-proxy-response-max-delay 100ms

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s https://api.github.com | head -1

HTTP/2 200

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s --max-time 5 https://support.github.com | head -1

command terminated with exit code 28

root@k8s-ctr:~# cilium hubble port-forward&

hubble observe --pod mediabot

Sep 7 10:07:36.152: kube-system/coredns-674b8bbfcf-p856r:53 (ID:48269) <> default/mediabot (ID:37240) pre-xlate-rev TRACED (UDP)

Sep 7 10:07:36.152: kube-system/kube-dns:53 (world) <> default/mediabot (ID:37240) post-xlate-rev TRANSLATED (UDP)

Sep 7 10:07:36.153: default/mediabot:44734 (ID:37240) -> kube-system/coredns-674b8bbfcf-p856r:53 (ID:48269) to-endpoint FORWARDED (UDP)

Sep 7 10:07:36.154: default/mediabot:44734 (ID:37240) <> kube-system/coredns-674b8bbfcf-p856r (ID:48269) pre-xlate-rev TRACED (UDP)

Sep 7 10:07:36.154: default/mediabot:44734 (ID:37240) <> kube-system/coredns-674b8bbfcf-p856r (ID:48269) pre-xlate-rev TRACED (UDP)

Sep 7 10:07:36.154: default/mediabot:42884 (ID:37240) <> support.github.com:443 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

Sep 7 10:07:36.154: default/mediabot:44734 (ID:37240) <- kube-system/coredns-674b8bbfcf-p856r:53 (ID:48269) to-network FORWARDED (UDP)

Sep 7 10:07:36.154: default/mediabot:42884 (ID:37240) <> support.github.com:443 (world) Policy denied DROPPED (TCP Flags: SYN)

Sep 7 10:07:36.157: default/mediabot:33584 (ID:37240) -> kube-system/coredns-674b8bbfcf-8297z:53 (ID:48269) to-endpoint FORWARDED (UDP)

Sep 7 10:07:36.157: default/mediabot:33584 (ID:37240) <> kube-system/coredns-674b8bbfcf-8297z (ID:48269) pre-xlate-rev TRACED (UDP)

Sep 7 10:07:36.158: default/mediabot:33584 (ID:37240) <> kube-system/coredns-674b8bbfcf-8297z (ID:48269) pre-xlate-rev TRACED (UDP)

Sep 7 10:07:36.158: default/mediabot:33584 (ID:37240) <- kube-system/coredns-674b8bbfcf-8297z:53 (ID:48269) to-network FORWARDED (UDP)

Sep 7 10:07:36.167: default/mediabot:53861 (ID:37240) -> kube-system/coredns-674b8bbfcf-p856r:53 (ID:48269) to-endpoint FORWARDED (UDP)

Sep 7 10:07:36.167: default/mediabot:53861 (ID:37240) <> kube-system/coredns-674b8bbfcf-p856r (ID:48269) pre-xlate-rev TRACED (UDP)

Sep 7 10:07:36.168: default/mediabot:53861 (ID:37240) <> kube-system/coredns-674b8bbfcf-p856r (ID:48269) pre-xlate-rev TRACED (UDP)

Sep 7 10:07:36.186: default/mediabot:53861 (ID:37240) <- kube-system/coredns-674b8bbfcf-p856r:53 (ID:48269) to-network FORWARDED (UDP)

Sep 7 10:07:37.197: default/mediabot:42884 (ID:37240) <> support.github.com:443 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

Sep 7 10:07:37.197: default/mediabot:42884 (ID:37240) <> support.github.com:443 (world) Policy denied DROPPED (TCP Flags: SYN)

Sep 7 10:07:38.221: default/mediabot:42884 (ID:37240) <> support.github.com:443 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

Sep 7 10:07:38.221: default/mediabot:42884 (ID:37240) <> support.github.com:443 (world) Policy denied DROPPED (TCP Flags: SYN)

Sep 7 10:07:39.646: default/mediabot:56174 (ID:37240) <> support.github.com:443 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

Sep 7 10:07:39.646: default/mediabot:56174 (ID:37240) <> support.github.com:443 (world) Policy denied DROPPED (TCP Flags: SYN)

Sep 7 10:07:40.488: default/mediabot:57742 (ID:37240) <> support.github.com:443 (world) policy-verdict:none EGRESS DENIED (TCP Flags: SYN)

Sep 7 10:07:40.488: default/mediabot:57742 (ID:37240) <> support.github.com:443 (world) Policy denied DROPPED (TCP Flags: SYN)

# Follow the CoreDNS logs

root@k8s-ctr:~# kubectl logs -n kube-system -l k8s-app=kube-dns -f

maxprocs: Leaving GOMAXPROCS=4: CPU quota undefined

.:53

[INFO] plugin/reload: Running configuration SHA512 = 1b226df79860026c6a52e67daa10d7f0d57ec5b023288ec00c5e05f93523c894564e15b91770d3a07ae1cfbe861d15b37d4a0027e69c546ab112970993a3b03b

CoreDNS-1.12.0

linux/arm64, go1.23.3, 51e11f1

[WARNING] plugin/health: Local health request to "http://:8080/health" failed: Get "http://:8080/health": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

[WARNING] plugin/health: Local health request to "http://:8080/health" took more than 1s: 1.351010835s

.:53

[INFO] plugin/reload: Running configuration SHA512 = 1b226df79860026c6a52e67daa10d7f0d57ec5b023288ec00c5e05f93523c894564e15b91770d3a07ae1cfbe861d15b37d4a0027e69c546ab112970993a3b03b

CoreDNS-1.12.0

linux/arm64, go1.23.3, 51e11f1

[WARNING] plugin/health: Local health request to "http://:8080/health" failed: Get "http://:8080/health": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

[WARNING] plugin/health: Local health request to "http://:8080/health" failed: Get "http://:8080/health": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

[WARNING] plugin/health: Local health request to "http://:8080/health" failed: Get "http://:8080/health": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

[INFO] plugin/kubernetes: pkg/mod/k8s.io/client-go@v0.31.2/tools/cache/reflector.go:243: watch of *v1.Service ended with: an error on the server ("unable to decode an event from the watch stream: http2: client connection lost") has prevented the request from succeeding

[INFO] plugin/kubernetes: pkg/mod/k8s.io/client-go@v0.31.2/tools/cache/reflector.go:243: watch of *v1.Namespace ended with: an error on the server ("unable to decode an event from the watch stream: http2: client connection lost") has prevented the request from succeeding

[INFO] plugin/kubernetes: pkg/mod/k8s.io/client-go@v0.31.2/tools/cache/reflector.go:243: watch of *v1.EndpointSlice ended with: an error on the server ("unable to decode an event from the watch stream: http2: client connection lost") has prevented the request from succeeding

# Store the name of the Cilium pod on each node in a variable

root@k8s-ctr:~# export CILIUMPOD0=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-ctr -o jsonpath='{.items[0].metadata.name}')

root@k8s-ctr:~# export CILIUMPOD1=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w1 -o jsonpath='{.items[0].metadata.name}')

root@k8s-ctr:~# export CILIUMPOD2=$(kubectl get -l k8s-app=cilium pods -n kube-system --field-selector spec.nodeName=k8s-w2 -o jsonpath='{.items[0].metadata.name}')

root@k8s-ctr:~# echo $CILIUMPOD0 $CILIUMPOD1 $CILIUMPOD2

## Define shortcuts (aliases)

root@k8s-ctr:~# alias c0="kubectl exec -it $CILIUMPOD0 -n kube-system -c cilium-agent -- cilium"

root@k8s-ctr:~# alias c1="kubectl exec -it $CILIUMPOD1 -n kube-system -c cilium-agent -- cilium"

root@k8s-ctr:~# alias c2="kubectl exec -it $CILIUMPOD2 -n kube-system -c cilium-agent -- cilium"

##

root@k8s-ctr:~# c0 fqdn cache list

root@k8s-ctr:~# c1 fqdn cache list

root@k8s-ctr:~# c2 fqdn cache list

Endpoint Source FQDN TTL ExpirationTime IPs

553 connection api.github.com. 0 2025-09-07T10:15:25.047Z 20.200.245.245

553 connection support.github.com. 0 2025-09-07T10:15:25.047Z 185.199.108.133

553 connection support.github.com. 0 2025-09-07T10:15:25.047Z 185.199.110.133

553 connection support.github.com. 0 2025-09-07T10:15:25.047Z 185.199.109.133

553 connection support.github.com. 0 2025-09-07T10:15:25.047Z 185.199.111.133

root@k8s-ctr:~# c0 fqdn names

root@k8s-ctr:~# c1 fqdn names

root@k8s-ctr:~# c2 fqdn names

{

"DNSPollNames": null,

"FQDNPolicySelectors": [

{

"regexString": "^api[.]github[.]com[.]$",

"selectorString": "MatchName: api.github.com, MatchPattern: "

}

]

}
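The regexString values printed by cilium fqdn names suggest how a selector is compiled: dots become [.], a trailing dot is appended for the fully qualified name, and * becomes [-a-zA-Z0-9_]*. The sketch below only reproduces the two strings seen in this post; the function name to_selector_regex is hypothetical, and Cilium's real implementation handles cases not modeled here (for example a bare *, as used in the DNS rule).

```python
import re

ALLOWED_DNS_CHARS = "[-a-zA-Z0-9_]*"  # what "*" expands to in the output


def to_selector_regex(name: str) -> str:
    """Sketch: turn a matchName/matchPattern value into an anchored regex
    shaped like the one printed by `cilium fqdn names`."""
    fqdn = name.lower().rstrip(".") + "."  # compare fully qualified names
    parts = []
    for ch in fqdn:
        if ch == ".":
            parts.append("[.]")
        elif ch == "*":
            parts.append(ALLOWED_DNS_CHARS)
        else:
            parts.append(re.escape(ch))
    return "^" + "".join(parts) + "$"


print(to_selector_regex("api.github.com"))  # ^api[.]github[.]com[.]$
print(to_selector_regex("*.github.com"))    # ^[-a-zA-Z0-9_]*[.]github[.]com[.]$
```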

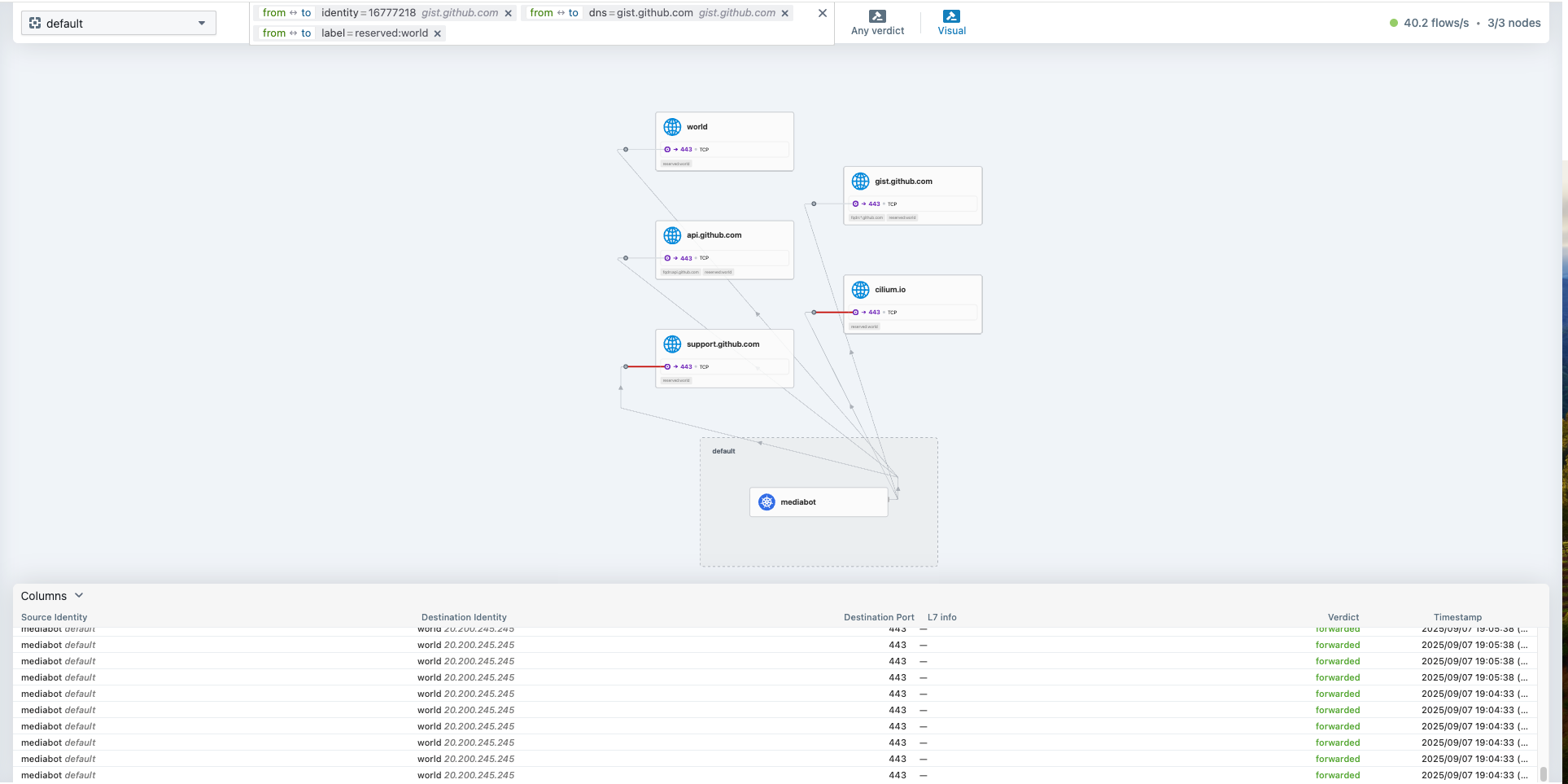

Lab 2) Applying DNS egress: allow access to all GitHub subdomains

root@k8s-ctr:~# kubectl delete cnp fqdn

root@k8s-ctr:~# c1 fqdn cache clean -f

FQDN proxy cache cleared

root@k8s-ctr:~# c2 fqdn cache clean -f

FQDN proxy cache cleared

root@k8s-ctr:~# cat dns-pattern.yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "fqdn"
spec:
  endpointSelector:
    matchLabels:
      org: empire
      class: mediabot
  egress:
  - toFQDNs:
    - matchPattern: "*.github.com"
  - toEndpoints:
    - matchLabels:
        "k8s:io.kubernetes.pod.namespace": kube-system
        "k8s:k8s-app": kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: ANY
      rules:
        dns:
        - matchPattern: "*"

root@k8s-ctr:~# kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.18.1/examples/kubernetes-dns/dns-pattern.yaml

root@k8s-ctr:~# c1 fqdn names

{

"DNSPollNames": null,

"FQDNPolicySelectors": []

}

root@k8s-ctr:~# c2 fqdn names

{

"DNSPollNames": null,

"FQDNPolicySelectors": [

{

"regexString": "^[-a-zA-Z0-9_]*[.]github[.]com[.]$",

"selectorString": "MatchName: , MatchPattern: *.github.com"

}

]

}

root@k8s-ctr:~# c1 fqdn cache list

Endpoint Source FQDN TTL ExpirationTime IPs

root@k8s-ctr:~# c2 fqdn cache list

Endpoint Source FQDN TTL ExpirationTime IPs

root@k8s-ctr:~# kubectl get cnp

NAME AGE VALID

fqdn 66s True

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s https://support.github.com | head -1

HTTP/2 302

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s https://gist.github.com | head -1

HTTP/2 302

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s --max-time 5 https://github.com | head -1

HTTP/2 200

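You can confirm that subdomains under *.github.com are reachable. Note that github.com itself does not match *.github.com (the regex above requires a label before .github.com). Its 200 response is most likely because github.com resolves to the same IP as gist.github.com (20.200.245.247, as the cache listing in Lab 3 shows), and that IP had already been allowed when gist.github.com was resolved.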
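To check which names a wildcard selector actually admits, the regexString printed by c2 fqdn names can be tested directly. This is only a local check of the regex; it does not query Cilium.

```python
import re

# regexString printed by `cilium fqdn names` for matchPattern "*.github.com"
PATTERN = re.compile(r"^[-a-zA-Z0-9_]*[.]github[.]com[.]$")

names = ["support.github.com.", "gist.github.com.", "api.github.com.", "github.com."]
results = {name: bool(PATTERN.match(name)) for name in names}
for name, matched in results.items():
    print(f"{name:<22} {matched}")
```

Every subdomain matches, but the apex github.com. does not, so reaching github.com depends on its IP having been allowed through some other matching name.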

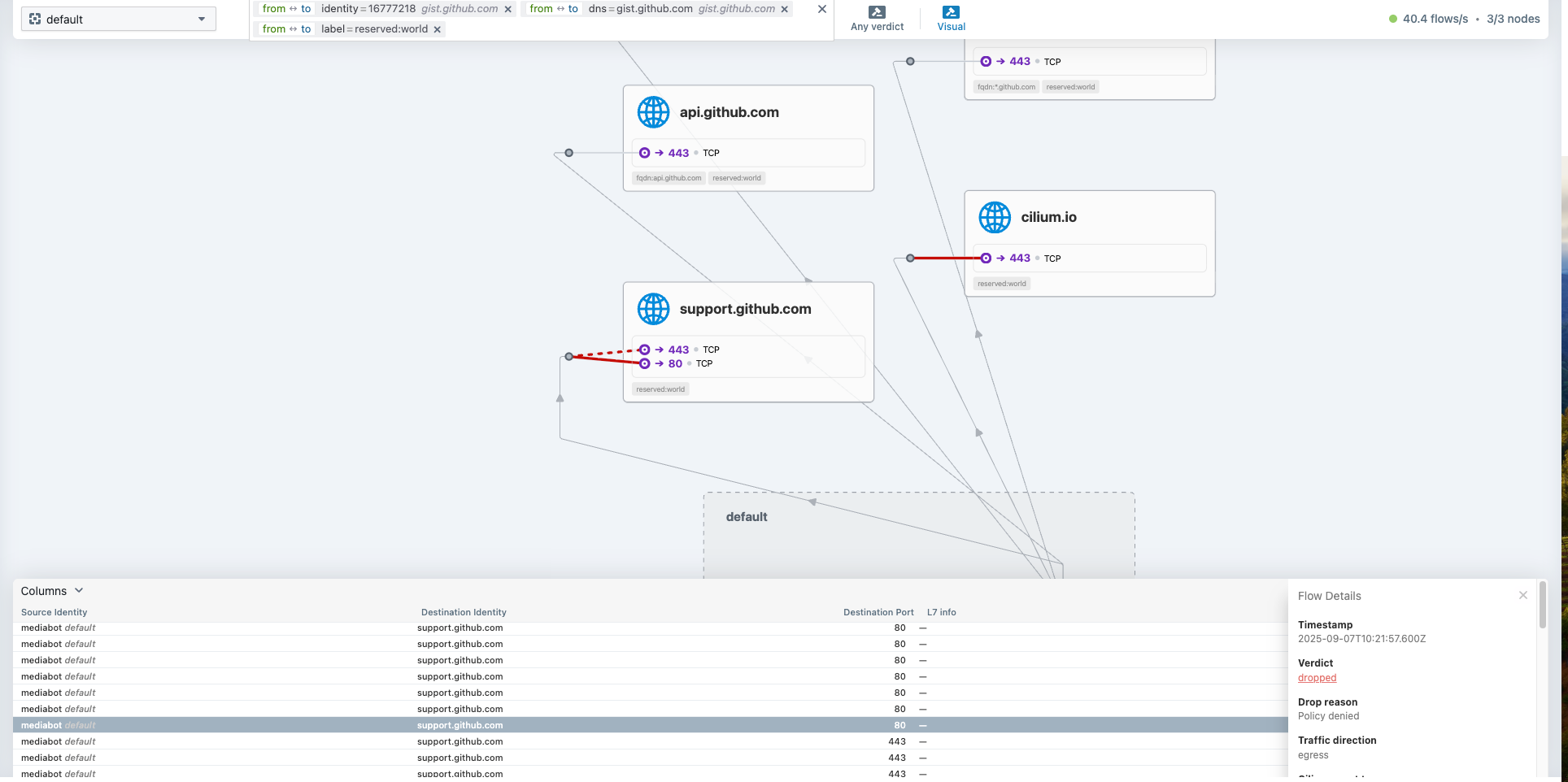

Lab 3) Applying DNS egress: combining DNS and port rules

root@k8s-ctr:~# kubectl delete cnp fqdn

ciliumnetworkpolicy.cilium.io "fqdn" deleted

root@k8s-ctr:~# cat dns-port.yaml
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "fqdn"
spec:
  endpointSelector:
    matchLabels:
      org: empire
      class: mediabot
  egress:
  - toFQDNs:
    - matchPattern: "*.github.com"
    toPorts:
    - ports:
      - port: "443"
        protocol: TCP
  - toEndpoints:
    - matchLabels:
        "k8s:io.kubernetes.pod.namespace": kube-system
        "k8s:k8s-app": kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: ANY
      rules:
        dns:
        - matchPattern: "*"

root@k8s-ctr:~# kubectl apply -f https://raw.githubusercontent.com/cilium/cilium/1.18.1/examples/kubernetes-dns/dns-port.yaml

ciliumnetworkpolicy.cilium.io/fqdn created

root@k8s-ctr:~# c1 fqdn names

{

"DNSPollNames": null,

"FQDNPolicySelectors": []

}

root@k8s-ctr:~# c2 fqdn names

{

"DNSPollNames": null,

"FQDNPolicySelectors": [

{

"regexString": "^[-a-zA-Z0-9_]*[.]github[.]com[.]$",

"selectorString": "MatchName: , MatchPattern: *.github.com"

}

]

}

root@k8s-ctr:~# c1 fqdn cache list

Endpoint Source FQDN TTL ExpirationTime IPs

root@k8s-ctr:~# c2 fqdn cache list

Endpoint Source FQDN TTL ExpirationTime IPs

553 connection support.github.com. 0 2025-09-07T10:32:18.053Z 185.199.109.133

553 connection gist.github.com. 0 2025-09-07T10:32:18.053Z 20.200.245.247

553 connection github.com. 0 2025-09-07T10:32:18.053Z 20.200.245.247

553 connection cilium.io. 0 2025-09-07T10:32:18.053Z 104.198.14.52

553 connection support.github.com. 0 2025-09-07T10:32:18.053Z 185.199.111.133

553 connection support.github.com. 0 2025-09-07T10:32:18.053Z 185.199.108.133

553 connection support.github.com. 0 2025-09-07T10:32:18.053Z 185.199.110.133

root@k8s-ctr:~# c1 fqdn cache clean -f

FQDN proxy cache cleared

root@k8s-ctr:~# c2 fqdn cache clean -f

FQDN proxy cache cleared

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s https://support.github.com | head -1

HTTP/2 302

root@k8s-ctr:~# kubectl exec mediabot -- curl -I -s --max-time 5 http://support.github.com | head -1

command terminated with exit code 28

Traffic to port 80 (http) is dropped, so curl gives up with exit code 28 (operation timed out), while traffic to port 443 (https) goes through.
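The combined rule can be sketched as two conditions that must both hold: the destination name matches the FQDN selector and the port/protocol is listed under toPorts. This simplification checks names directly, whereas Cilium actually checks destination IPs learned via DNS; FqdnPortRule and egress_allowed are hypothetical names.

```python
import re
from typing import NamedTuple


class FqdnPortRule(NamedTuple):
    name_regex: str
    port: int
    protocol: str


# Mirrors the toFQDNs + toPorts block of dns-port.yaml
RULE = FqdnPortRule(r"^[-a-zA-Z0-9_]*[.]github[.]com[.]$", 443, "TCP")


def egress_allowed(fqdn: str, port: int, protocol: str = "TCP") -> bool:
    # Both conditions must hold: the name matches the FQDN selector
    # and the destination port/protocol is listed in toPorts.
    name = fqdn if fqdn.endswith(".") else fqdn + "."
    name_ok = bool(re.match(RULE.name_regex, name))
    port_ok = (port, protocol) == (RULE.port, RULE.protocol)
    return name_ok and port_ok


print(egress_allowed("support.github.com", 443))  # True  (https)
print(egress_allowed("support.github.com", 80))   # False (http, dropped)
```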
